In recent months, I have seen dozens of projects proclaiming themselves "ready for AI". This bothered me because it looked more like a marketing ploy than a claim grounded in engineering. I decided to investigate what it really means for a blockchain to be prepared for artificial intelligence.

The biggest misconception I found is the belief that speed solves everything. Many people assume that handling millions of transactions per second automatically makes a network compatible with AI. It's like saying a fast car is good for moving house just because it goes fast - it completely ignores the need for cargo space.

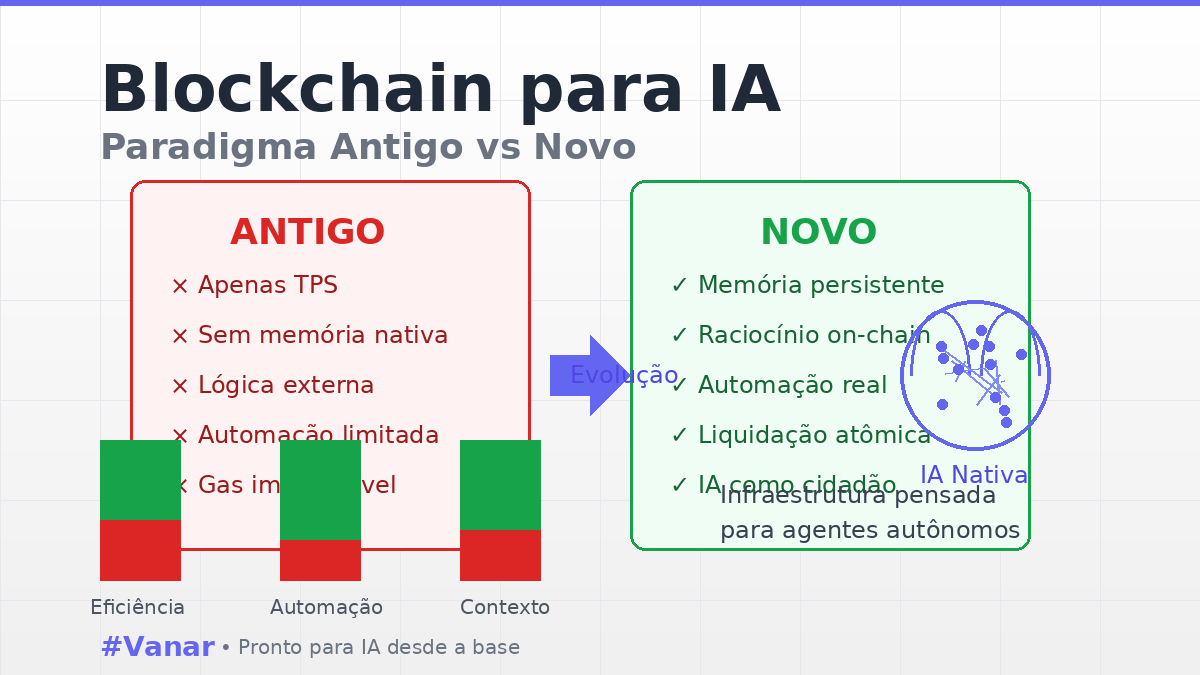

It's high time we stopped measuring blockchains solely by TPS. That metric comes from the days when networks only processed simple payments. AI agents need more than just speed; they need context, history, and the ability to make decisions based on accumulated data.

Old design assumptions are dangerous. Most blockchains were created with humans making occasional transactions in mind. Autonomous agents operate radically differently, working 24/7, processing complex patterns, and making decisions in milliseconds based on multiple variables.

After studying several technical whitepapers, I identified four essential pillars that AI systems truly need from a blockchain: native memory at the protocol level, on-chain reasoning capability, true automation without human intervention, and instant settlement with cryptographic guarantees.

Native memory allows agents to access complete history without relying on costly external oracles. Reasoning means executing complex logic directly on-chain, not just transferring value. Real automation happens when smart contracts can self-execute based on conditions without anyone pressing buttons. Settlement must be atomic - either every step of a transaction takes effect or none does.
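To make the "all or nothing" idea concrete, here is a minimal sketch of atomic settlement in Python. It is a toy in-memory model, not any real chain's API: `ToyLedger`, `SettlementError`, and the agent names are hypothetical names invented for illustration. The idea is to stage every transfer on a copy of the balances and commit only if all of them succeed.

```python
from dataclasses import dataclass, field


class SettlementError(Exception):
    """Raised when any leg of a settlement cannot be applied."""


@dataclass
class ToyLedger:
    """Hypothetical in-memory ledger, used only to illustrate atomicity."""
    balances: dict[str, int] = field(default_factory=dict)

    def settle(self, transfers: list[tuple[str, str, int]]) -> None:
        """Apply every (sender, receiver, amount) leg, or none of them."""
        staged = dict(self.balances)  # work on a copy, never the live state
        for sender, receiver, amount in transfers:
            if amount <= 0:
                raise SettlementError(f"invalid amount: {amount}")
            if staged.get(sender, 0) < amount:
                raise SettlementError(f"{sender} cannot cover {amount}")
            staged[sender] -= amount
            staged[receiver] = staged.get(receiver, 0) + amount
        self.balances = staged  # commit only after all legs succeeded


ledger = ToyLedger({"agent_a": 100, "agent_b": 20})

# Both legs are valid, so the whole batch is committed.
ledger.settle([("agent_a", "agent_b", 30), ("agent_b", "agent_a", 10)])

# The second leg fails, so the first leg is discarded as well.
try:
    ledger.settle([("agent_a", "agent_b", 50), ("agent_b", "agent_c", 500)])
except SettlementError as err:
    print("settlement rejected:", err)

print(ledger.balances)
```

After the failed batch, the balances are exactly what they were before it started. A real network would enforce this at the transaction or protocol level rather than with a copied dictionary, but the guarantee an agent relies on is the same.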

When any of these elements is missing, the entire system collapses. I've seen projects try to compensate for a lack of memory with centralized databases, which defeats the purpose of decentralization. Others rely on external bots for automation, creating single points of failure.

What impressed me when studying @Vanarchain was its ground-up approach to infrastructure. AI was not bolted on later as a feature - the entire architecture was designed with autonomous agents in mind as first-class citizens on the network.

At the protocol level, there is native support for persistent states that agents can query without prohibitive costs. Execution allows complex logic without hitting artificially low gas limits. The consensus mechanisms have been optimized for continuous usage patterns rather than sporadic spikes.

This transforms $VANRY from a speculative bet into direct exposure to the infrastructure that AI agents will actually use. It's not about promising the future; it's about having built the right foundations from the start.

The market has yet to properly price the difference between projects that only talk about AI and those that have addressed the fundamental architectural issues. This disparity will not last forever.