There is a quiet shift happening in Web3. For years, crypto rewarded speed, novelty, and narrative velocity. New chains, new primitives, new tokens, each promising to redefine the stack. But as capital scales, users diversify, and real-world value begins to touch on-chain systems, the tolerance for failure collapses. In this new phase, infrastructure does not need to be exciting. It needs to be correct.

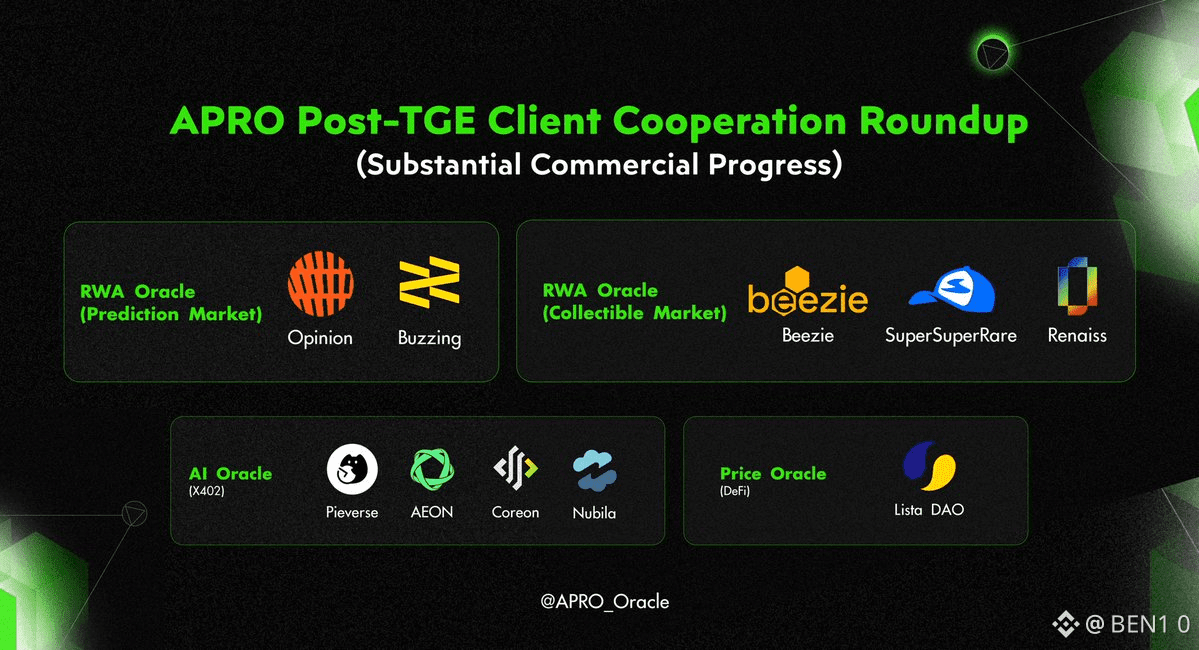

This is where APRO Oracle begins to matter, not as a story but as a structural necessity.

APRO does not present itself as a vision of the future. It behaves like a constraint imposed by reality: when systems grow large enough, they can no longer afford to guess.

When “Trustless” Systems Quietly Depend on Trust

Blockchains were designed to remove trust from execution. They succeed brilliantly at that. But execution is only half the system. Inputs still come from the outside world: prices, events, randomness, asset states, and other real-world signals that blockchains cannot observe on their own.

This is the uncomfortable truth Web3 has lived with for years:

smart contracts are deterministic, but their inputs are probabilistic.

Oracles sit at this boundary. And when that boundary fails, consequences are not theoretical. Liquidations cascade. Markets desynchronize. Games break. Protocols bleed credibility.

APRO is built with the assumption that this layer is no longer allowed to fail quietly.

Why APRO Feels Like Infrastructure, Not a Product

Most projects optimize for visibility. Infrastructure optimizes for survivability.

APRO’s design choices consistently signal the latter.

It does not force applications into a single data consumption pattern. Instead, it supports two fundamentally different temporal needs:

Data Push, for environments where risk evolves continuously and latency is itself a danger

Data Pull, for environments where precision matters more than frequency

This is not a feature checklist. It is an admission that time behaves differently across applications. Lending protocols, prediction markets, AI agents, and games do not experience risk on the same clock. APRO respects that.

Infrastructure that ignores this distinction eventually breaks under edge cases. APRO is built around them.
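The two timing models can be made concrete with a short sketch. It illustrates the generic push and pull patterns common across oracle designs and is not APRO's actual interface: `PushFeed`, `pull_read`, and the parameters `deviation_bps`, `heartbeat_s`, and `max_age_s` are hypothetical names chosen for this example. A push feed decides on its own when to publish (here, when the price moves past a deviation threshold or a heartbeat interval expires), while a pull consumer fetches a report only at the moment it needs one and rejects it if it is too old. Signature verification of the report, which a real pull flow would require, is deliberately omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PushFeed:
    """Hypothetical push-style feed: publishes on a large enough move or when the heartbeat expires."""
    deviation_bps: int                 # assumed threshold, in basis points (1 bp = 0.01%)
    heartbeat_s: int                   # assumed maximum seconds between two published updates
    last_price: Optional[float] = None
    last_ts: Optional[int] = None

    def should_publish(self, price: float, now: int) -> bool:
        if self.last_price is None or self.last_ts is None:
            return True  # nothing published yet
        if now - self.last_ts >= self.heartbeat_s:
            return True  # stale: refresh even if the price has not moved
        change_bps = abs(price - self.last_price) / self.last_price * 10_000
        return change_bps >= self.deviation_bps

    def observe(self, price: float, now: int) -> bool:
        """Feed one off-chain observation; returns True if it would be pushed on-chain."""
        if self.should_publish(price, now):
            self.last_price, self.last_ts = price, now
            return True
        return False


def pull_read(report: dict, now: int, max_age_s: int) -> float:
    """Hypothetical pull-style read: the consumer requests a report on demand and enforces freshness itself."""
    age = now - report["timestamp"]
    if age > max_age_s:
        raise ValueError(f"report is {age}s old; maximum allowed is {max_age_s}s")
    return report["price"]
```

With `deviation_bps=50` and `heartbeat_s=3600`, a 0.2% move is absorbed silently while a 0.6% move triggers an update. The trade-off sits in the parameters: a push feed pays for freshness continuously, while a pull consumer pays only at the moment of use but must enforce its own staleness bound.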

Verification as a First-Class Constraint

Most oracle systems assume that decentralization alone neutralizes bad data. History suggests otherwise.

APRO treats verification as a layered process, not a binary outcome.

Off-chain, data is sourced from multiple independent inputs and processed through anomaly detection and cross-validation mechanisms enhanced by AI. This is not about replacing decentralization with intelligence. It is about reducing the probability that obviously wrong data ever reaches consensus.

On-chain, finality remains cryptographic and collective.

The result is not “perfect truth.” It is something more valuable in large systems:

bounded error with traceable accountability.

That is what institutions, serious DeFi, and real-world asset platforms actually need.
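The off-chain filtering step can be illustrated with a plain statistical stand-in. APRO describes AI-enhanced anomaly detection; the sketch below swaps in a simpler, well-known technique (median aggregation with median-absolute-deviation outlier rejection) purely to show the shape of the idea. The function `aggregate` and its parameters `k` and `min_sources` are hypothetical. It returns the excluded sources alongside the answer, which is one way to make the error both bounded and traceable.

```python
from statistics import median


def aggregate(reports: dict[str, float], k: float = 3.0, min_sources: int = 3) -> tuple[float, list[str]]:
    """Hypothetical off-chain filter: median of sources after MAD-based outlier rejection.

    Returns the aggregated value and the sorted list of rejected sources,
    so that every exclusion can be audited later.
    """
    if len(reports) < min_sources:
        raise ValueError("not enough independent sources")
    mid = median(reports.values())
    # Median absolute deviation: a spread estimate that one wild source cannot drag around.
    mad = median(abs(v - mid) for v in reports.values())
    tolerance = k * mad  # if MAD is 0, only values equal to the median survive
    accepted = {src: v for src, v in reports.items() if abs(v - mid) <= tolerance}
    rejected = sorted(set(reports) - set(accepted))
    if len(accepted) < min_sources:
        # Too much disagreement: refuse to publish rather than guess.
        raise ValueError(f"only {len(accepted)} sources agree; refusing to publish")
    return median(accepted.values()), rejected
```

Fed five sources where one reports 250.0 against a cluster near 100.1, the filter drops the outlier, names it in `rejected`, and returns the median of the remaining four. Nothing here replaces on-chain consensus; it only lowers the odds that obviously wrong data reaches it.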

Randomness Is Not a Toy Primitive Anymore

Randomness is often discussed as a gaming feature. In reality, it is a governance and fairness primitive.

Auctions, allocations, leader selection, reward distribution, and adversarial games all collapse when randomness can be anticipated or influenced. APRO’s verifiable randomness framework treats entropy as infrastructure, not entertainment.

When outcomes can be proven fair after the fact, systems retain legitimacy even when users lose.

That distinction matters at scale.
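What "proven fair after the fact" means can be shown with the simplest verifiable construction, a commit-reveal scheme. This is neither APRO's randomness framework nor a VRF (verifiable random function), which uses public-key cryptography so that each input maps to exactly one verifiable output; it is a stand-in chosen because it fits in a few lines of standard-library Python. The function names `commit` and `reveal_and_verify` are hypothetical.

```python
import hashlib


def commit(seed: bytes) -> str:
    """Step 1: publish the hash of a secret seed before the outcome is needed."""
    return hashlib.sha256(seed).hexdigest()


def reveal_and_verify(commitment: str, seed: bytes, n_options: int) -> int:
    """Step 2: once the seed is revealed, anyone can check it and derive the same outcome."""
    if hashlib.sha256(seed).hexdigest() != commitment:
        raise ValueError("revealed seed does not match the earlier commitment")
    # Domain-separate the outcome hash from the commitment hash.
    digest = hashlib.sha256(b"outcome:" + seed).digest()
    # Map 256 bits onto [0, n_options); modulo bias is negligible for small n_options.
    return int.from_bytes(digest, "big") % n_options
```

In practice the seed would come from a cryptographically secure source such as Python's `secrets.token_bytes(32)`. Anyone holding the published commitment can rerun `reveal_and_verify` and reach the same outcome, which is what lets losers accept a result. The scheme's weaknesses are also instructive: the committer learns the outcome first and can refuse to reveal if it dislikes it, which is why production systems typically rely on VRFs, multi-party schemes, or penalties for non-revelation.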

Architecture That Assumes Stress, Not Normalcy

APRO’s two-layer architecture is not an optimization trick. It is a failure-containment strategy.

One layer absorbs data complexity, noise, and preprocessing

Another enforces finality and on-chain accountability

This separation introduces fault boundaries, something most Web3 systems still lack. When errors happen (and they will), APRO is designed so that mistakes do not instantly harden into irreversible on-chain state.

That is how mature systems behave.
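One way to make such a fault boundary concrete is a staging layer with a challenge window: values arrive in a pending area where they can still be disputed, and only undisputed values that have aged past the window are promoted to final state. This mechanism is an assumption borrowed from optimistic-oracle designs, not a description of how APRO's two layers actually hand off data; `TwoLayerFeed`, `challenge_window_s`, and the method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    value: float
    submitted_at: int
    disputed: bool = False


@dataclass
class TwoLayerFeed:
    """Hypothetical two-stage feed: a reversible staging layer in front of final state."""
    challenge_window_s: int
    pending: dict[str, Candidate] = field(default_factory=dict)  # layer 1: still reversible
    finalized: dict[str, float] = field(default_factory=dict)    # layer 2: treated as irreversible

    def submit(self, key: str, value: float, now: int) -> None:
        self.pending[key] = Candidate(value, now)

    def dispute(self, key: str) -> None:
        if key in self.pending:
            self.pending[key].disputed = True

    def finalize(self, now: int) -> None:
        """Promote undisputed candidates whose window has elapsed; drop disputed ones."""
        for key in list(self.pending):
            candidate = self.pending[key]
            if candidate.disputed:
                del self.pending[key]  # the bad value never reaches final state
            elif now - candidate.submitted_at >= self.challenge_window_s:
                self.finalized[key] = candidate.value
                del self.pending[key]
```

The price of this boundary is latency: no value is final until its window closes, so the window length is a direct trade between speed and the chance to catch an error.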

Multi-Chain Is Not a Marketing Term Here

Supporting 40+ networks is not impressive by itself. Maintaining consistent guarantees across them is.

APRO does not assume a winner-takes-all chain future. It assumes fragmentation, specialization, and interoperability. By positioning itself as a neutral data layer, it reduces the coordination burden for developers building across ecosystems.

This is infrastructure thinking:

do not force the world to converge; design for divergence.
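Consistency across networks is something a monitor can check directly. The sketch below, with hypothetical names and thresholds throughout, compares each chain's copy of the same feed against the newest round and the cross-chain median, and flags any chain that is stale or has drifted. It assumes each chain exposes a `(round_id, value)` pair for the feed and that values are positive prices; it says nothing about how APRO itself keeps its deployments in sync.

```python
from statistics import median


def divergent_chains(
    readings: dict[str, tuple[int, float]],
    max_spread_bps: float,
    max_round_lag: int,
) -> list[str]:
    """Hypothetical cross-chain check for one feed.

    readings maps a chain name to the (round_id, value) currently stored there.
    A chain is flagged if it lags the newest round by more than max_round_lag,
    or if its value drifts from the cross-chain median by more than max_spread_bps.
    """
    latest_round = max(round_id for round_id, _ in readings.values())
    reference = median(value for _, value in readings.values())  # assumes positive prices
    flagged = []
    for chain, (round_id, value) in readings.items():
        stale = latest_round - round_id > max_round_lag
        drift_bps = abs(value - reference) / reference * 10_000
        if stale or drift_bps > max_spread_bps:
            flagged.append(chain)
    return sorted(flagged)
```

A check like this turns "consistent guarantees" into something measurable: a chain three rounds behind, or one whose value sits a few percent away from its peers, shows up by name rather than surfacing later as a failed liquidation.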

Why This Matters More in the Next Phase of Web3

As Web3 moves closer to real economies (RWAs, institutional capital, AI-driven agents, and persistent gaming worlds), the cost of being wrong increases faster than the cost of being slow.

Liquidity and speed were moats in earlier cycles.

In the next one, correctness under stress becomes the moat.

APRO is not optimized for virality. It is optimized for the moment when failure is no longer tolerated.

And those are the systems that quietly outlive narratives.

Final Thought

You do not talk about infrastructure when it works.

You talk about it when it is missing.

APRO is building for the moment when on-chain systems stop being allowed to fail casually and start being expected to defend their decisions after the fact.

That is not a story.

That is a responsibility.