Article reprinted from: Machine Heart

Source: Synced

Image source: Generated by Unbounded AI

Text-guided video-to-video (V2V) synthesis has wide applications, from short-video creation to the broader film industry. Diffusion models have revolutionized image-to-image (I2I) synthesis, but in V2V synthesis they struggle to keep video frames temporally consistent: applying an I2I model to a video frame by frame often produces flickering pixels between frames.

To solve this problem, researchers from the University of Texas at Austin and Meta GenAI proposed FlowVid, a new V2V synthesis framework that jointly exploits spatial conditions and temporal optical-flow cues from the source video. Given an input video and a text prompt, FlowVid synthesizes a temporally consistent video.

Paper: https://huggingface.co/papers/2312.17681

Project page: https://jeff-liangf.github.io/projects/flowvid/

Overall, FlowVid is highly flexible and works seamlessly with existing I2I models to perform a range of edits, including stylization, object swapping, and local editing. It is also efficient: generating a 4-second, 30 FPS video at 512×512 resolution takes only 1.5 minutes, which is 3.1, 7.2, and 10.5 times faster than CoDeF, Rerender, and TokenFlow, respectively, while maintaining high output quality.

Let's first look at some results, for example turning the people in a video into a "Greek sculpture":

Turning a giant panda eating bamboo into a "Chinese painting", and then replacing the panda with a koala:

Restyling a jump-rope scene smoothly, and even turning the person into Batman:

Method Introduction

Some prior studies use optical flow to derive pixel correspondences, i.e. a pixel-level mapping between two frames, which is then used to obtain occlusion masks or to construct a canonical image. However, such hard constraints become problematic when the flow estimate is inaccurate.

FlowVid first edits the first frame with a common I2I model and then propagates those edits to the subsequent frames to produce the full video.

Specifically, FlowVid uses optical flow to warp the first frame to each subsequent frame. These warped frames follow the structure of the original frames but contain some occluded regions (marked in gray), as shown in Figure 2(b).
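The article gives no code, so the following is only a minimal pure-Python sketch of what warping the first frame toward a later frame could look like. Assumptions (not from the source): frames are small grayscale 2D lists; the flow is a dense backward flow from frame t to frame 0, which in practice would come from an optical-flow estimator; sampling is nearest-neighbour; holes are filled with the value 128 to mimic the gray regions in Figure 2(b); and an optional forward-backward consistency check, a common occlusion heuristic, flags pixels whose flow does not round-trip. The name `warp_first_frame` is hypothetical and this is not FlowVid's implementation.

```python
import math

GRAY = 128  # assumed fill value for pixels with no valid source (shown gray in Figure 2(b))


def _nearest(v):
    """Round to the nearest integer pixel coordinate (halves round up)."""
    return int(math.floor(v + 0.5))


def warp_first_frame(frame0, bwd_flow, fwd_flow=None, tol=0.5):
    """Hypothetical helper: warp frame 0 into the geometry of a later frame t.

    frame0:   H x W list of grayscale values for the (edited) first frame.
    bwd_flow: H x W list of (dx, dy); pixel (x, y) of frame t comes from
              (x + dx, y + dy) in frame 0.
    fwd_flow: optional H x W list of (dx, dy) from frame 0 to frame t, used for
              a forward-backward consistency check.
    Returns (warped, mask); mask[y][x] == 1 marks pixels treated as occluded.
    """
    h, w = len(frame0), len(frame0[0])
    warped = [[GRAY] * w for _ in range(h)]
    mask = [[1] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = bwd_flow[y][x]
            sx, sy = _nearest(x + dx), _nearest(y + dy)
            if not (0 <= sx < w and 0 <= sy < h):
                continue  # source lies outside frame 0: keep gray, keep mask = 1
            if fwd_flow is not None:
                fx, fy = fwd_flow[sy][sx]
                # A consistent pair of flows should round-trip back to (x, y).
                if math.hypot(dx + fx, dy + fy) > tol:
                    continue  # inconsistent flow: treat the pixel as occluded
            warped[y][x] = frame0[sy][sx]
            mask[y][x] = 0
    return warped, mask


# Content moves one pixel to the right between frame 0 and frame t.
frame0 = [[10, 20, 30, 40]]
bwd = [[(-1, 0)] * 4]
fwd = [[(1, 0)] * 4]
warped, mask = warp_first_frame(frame0, bwd, fwd)
print(warped, mask)  # [[128, 10, 20, 30]] [[1, 0, 0, 0]]
```

The leftmost pixel has no source in frame 0, so it stays gray and is flagged; if the forward flow disagreed with the backward flow, the consistency check would flag those pixels too.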

If flow is used as a hard constraint, for example by simply inpainting the occluded regions, errors in the flow estimate persist in the output. This study therefore introduces an additional spatial condition, such as the depth map in Figure 2(c), alongside the temporal flow condition. Together, the spatial and temporal conditions let the model correct imperfect optical flow, producing the consistent result in Figure 2(d).

The researchers built a video diffusion model by inflating a spatially controlled I2I model, i.e. extending the image model so that it processes a sequence of frames. They trained the model to reconstruct the input videos from spatial conditions (such as depth maps) and temporal conditions (flow-warped videos).

During generation, the researchers adopt an edit-propagate procedure: (1) edit the first frame with a popular I2I model; (2) propagate the edits throughout the video with their trained model. Because editing and propagation are decoupled, the pipeline can also run autoregressively: the last frame of the current batch serves as the first frame of the next batch, which makes it possible to generate long videos.
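A minimal sketch of the edit-propagate loop with autoregressive batching follows. `edit_first_frame` stands in for an off-the-shelf I2I editor and `propagate` for FlowVid's video model; both are hypothetical callables, and only the batch layout (each batch re-uses the previous batch's last output as its first frame) is taken from the article.

```python
def autoregressive_batches(num_frames, batch_size):
    """Split frame indices 0..num_frames-1 into batches in which every batch
    after the first starts with the last frame of the previous batch."""
    if batch_size < 2:
        raise ValueError("batch_size must be at least 2 so each batch adds new frames")
    batches, start = [], 0
    while True:
        end = min(start + batch_size, num_frames)
        batches.append(list(range(start, end)))
        if end >= num_frames:
            return batches
        start = end - 1  # overlap by one frame: last output becomes next first frame


def generate_long_video(frames, batch_size, edit_first_frame, propagate):
    """Edit-propagate loop. `edit_first_frame(frame)` and
    `propagate(source_frames, edited_first)` are hypothetical stand-ins for an
    I2I editor and FlowVid's video model; `propagate` returns one output per
    source frame, starting with `edited_first`."""
    edited = {0: edit_first_frame(frames[0])}
    for batch in autoregressive_batches(len(frames), batch_size):
        outputs = propagate([frames[i] for i in batch], edited[batch[0]])
        for i, out in zip(batch, outputs):
            edited.setdefault(i, out)  # never overwrite an already generated frame
    return [edited[i] for i in range(len(frames))]
```

With 40 frames and batches of 16, the batches cover frames 0 to 15, 15 to 30, and 30 to 39; each batch after the first contributes at most 15 new frames because one slot is taken by the carried-over frame.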

Experiments and Results

Experimental settings

The researchers trained the model on 100k videos from Shutterstock. For each training video, they sampled 16 frames in sequence, spaced 2, 4, or 8 frames apart, which correspond to clips of 1, 2, or 4 seconds (the videos are 30 FPS). All frames were center-cropped to 512×512. Training used a batch size of 1 per GPU on 8 GPUs (a total batch size of 8) and the AdamW optimizer with a learning rate of 1e-5 for 100k iterations.
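The relationship between sampling interval and clip length can be checked with a short sketch: 16 frames spaced k apart span 15k frames, i.e. 15k/30 seconds at 30 FPS, which gives exactly 1, 2, and 4 seconds for k = 2, 4, 8. The random start index and the uniform choice of interval below are assumptions; the article only states the frame count and the intervals. `sample_clip` is a hypothetical helper.

```python
import random

FPS = 30          # frame rate of the training videos (from the article)
NUM_FRAMES = 16   # frames per training clip (from the article)
INTERVALS = (2, 4, 8)


def clip_duration_seconds(interval):
    """Time spanned from the first to the last sampled frame."""
    return (NUM_FRAMES - 1) * interval / FPS


def sample_clip(video_len, interval, rng):
    """Hypothetical helper: NUM_FRAMES indices spaced `interval` apart,
    starting at a random position (the random start is an assumption)."""
    span = (NUM_FRAMES - 1) * interval
    if video_len <= span:
        raise ValueError("video is too short for this interval")
    start = rng.randrange(video_len - span)
    return [start + k * interval for k in range(NUM_FRAMES)]


print([clip_duration_seconds(k) for k in INTERVALS])  # [1.0, 2.0, 4.0]
rng = random.Random(0)
indices = sample_clip(video_len=300, interval=rng.choice(INTERVALS), rng=rng)
```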

During generation, the researchers first used the trained model to generate keyframes and then used an off-the-shelf frame interpolation model, RIFE, to generate the frames in between. By default, 16 keyframes are generated at an interval of 4, which corresponds to a 2-second clip at 8 FPS; RIFE then interpolates the result to 32 FPS. They used classifier-free guidance with a scale of 7.5 and 20 inference sampling steps, together with a zero terminal signal-to-noise ratio (zero SNR) noise scheduler. Following FateZero, they also fused in the self-attention features obtained by DDIM inversion of the corresponding keyframes of the input video.
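Two of these sampling settings can be made concrete in a few lines. Classifier-free guidance combines the unconditional and text-conditioned noise predictions as ε̂ = ε_uncond + s·(ε_cond − ε_uncond), here with s = 7.5. For the zero-SNR scheduler, the sketch follows the rescaling recipe from Lin et al., "Common Diffusion Noise Schedules and Sample Steps are Flawed": shift and scale √ᾱ_t so that the final step has ᾱ_T = 0 (pure noise, zero signal-to-noise ratio) while the first step is unchanged. Whether FlowVid uses exactly this recipe, and the Stable-Diffusion-style base schedule used here, are assumptions; vectors are plain Python lists to keep the example dependency-free.

```python
import math


def classifier_free_guidance(eps_uncond, eps_cond, scale=7.5):
    """Extrapolate from the unconditional toward the text-conditioned prediction."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def scaled_linear_betas(n=1000, start=0.00085, end=0.012):
    """Stable-Diffusion-style base schedule (assumed here for illustration)."""
    a, b = math.sqrt(start), math.sqrt(end)
    return [(a + (b - a) * i / (n - 1)) ** 2 for i in range(n)]


def rescale_zero_terminal_snr(betas):
    """Rescale a beta schedule so the last step has alpha_bar = 0 (zero SNR),
    following the recipe of Lin et al.; the first step is left unchanged."""
    alphas_bar, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alphas_bar.append(prod)
    s = [math.sqrt(ab) for ab in alphas_bar]
    s0, s_last = s[0], s[-1]
    # Shift so the last sqrt(alpha_bar) becomes 0, then scale so the first is s0 again.
    s = [(v - s_last) * s0 / (s0 - s_last) for v in s]
    new_bar = [v * v for v in s]
    # Convert cumulative products back to per-step betas.
    return [1.0 - new_bar[0]] + [
        1.0 - new_bar[t] / new_bar[t - 1] for t in range(1, len(new_bar))
    ]


def snr(alpha_bar):
    """Signal-to-noise ratio of x_t = sqrt(ab) * x0 + sqrt(1 - ab) * eps."""
    return alpha_bar / (1.0 - alpha_bar)


guided = classifier_free_guidance([0.0, 1.0], [1.0, 1.0])  # [7.5, 1.0]
betas = rescale_zero_terminal_snr(scaled_linear_betas())
```

After rescaling, the last beta is exactly 1, so the final cumulative ᾱ and its SNR are 0: sampling starts from pure noise, matching what the model sees at the noisiest training step.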

The researchers selected 25 object-centric videos from the public DAVIS dataset, covering humans, animals, and other subjects, and manually wrote 115 prompts for them, ranging from stylization to object swapping. They also collected 50 Shutterstock videos and wrote 200 prompts for those. Both the qualitative and the quantitative comparisons were conducted on these videos.

Qualitative results

Figure 5 qualitatively compares FlowVid with several representative methods. When the input video contains a lot of motion, CoDeF produces noticeably blurry outputs, for example around the man's hand and the tiger's face. Rerender often fails to capture large motions, such as the movement of the paddle in the example on the left. TokenFlow occasionally fails to follow the prompt, for example not turning the man into a pirate in the example on the left. In contrast, FlowVid shows advantages in both editing ability and video quality.

Quantitative results

The researchers conducted a human evaluation comparing FlowVid with CoDeF, Rerender, and TokenFlow. Participants were shown four videos and asked to pick the one with the best quality, considering both temporal consistency and text alignment. FlowVid was preferred 45.7% of the time, outperforming the other three methods (detailed results are in Table 1). Table 1 also lists each method's pipeline runtime: FlowVid takes 1.5 minutes, versus 4.6 minutes for CoDeF, 10.8 minutes for Rerender, and 15.8 minutes for TokenFlow, making it 3.1, 7.2, and 10.5 times faster, respectively.
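The speedup factors follow directly from the runtimes in Table 1. Dividing each baseline's time by FlowVid's 1.5 minutes and rounding to one decimal reproduces the reported figures:

```python
# Pipeline runtimes in minutes, as reported in Table 1.
runtimes = {"FlowVid": 1.5, "CoDeF": 4.6, "Rerender": 10.8, "TokenFlow": 15.8}


def speedups(times, ours="FlowVid"):
    """How many times faster `ours` is than each baseline, to one decimal."""
    return {name: round(t / times[ours], 1) for name, t in times.items() if name != ours}


print(speedups(runtimes))  # {'CoDeF': 3.1, 'Rerender': 7.2, 'TokenFlow': 10.5}
```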

Ablation experiment

The researchers studied combinations of the four conditions shown in Figure 6(a): (I) spatial control, e.g. a depth map; (II) the flow-warped video, i.e. frames warped from the first frame using optical flow; (III) a flow occlusion mask indicating which parts are occluded (marked in white); and (IV) the first frame.

Figure 6(b) evaluates these combinations by their win rate against the full model that uses all four conditions. The spatial condition alone reaches a win rate of only 9%, because it carries no temporal information. Adding the flow-warped video raises the win rate sharply to 38%, highlighting the importance of temporal guidance. Because occluded areas are filled with gray pixels, they can be confused with regions that are genuinely gray in the image; to avoid this, the researchers add a binary flow occlusion mask that tells the model explicitly which parts are occluded, raising the win rate further to 42%. Finally, they add the first frame as a condition to provide better texture guidance, which is particularly useful when the occluded area is large and few original pixels remain.
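The ambiguity that motivates condition (III) can be shown with a toy example. If occluded pixels are filled with gray (128 here, an assumed value), a pixel that is genuinely gray in the scene is indistinguishable from a hole, so any occlusion estimate based on color alone produces false positives; an explicit binary mask removes the guesswork. The article does not describe how FlowVid feeds the mask to its network, so the per-pixel (value, mask) pairing below is purely illustrative.

```python
GRAY = 128  # assumed fill value for occluded pixels

# One row of a flow-warped frame: pixel 0 is genuinely gray in the scene,
# pixel 1 is a hole left by occlusion, pixel 2 is ordinary content.
warped = [[128, 128, 200]]
true_mask = [[0, 1, 0]]  # 1 = occluded, as in condition (III)


def guess_mask_from_color(frame, fill=GRAY):
    """Without a mask, the only occlusion cue is the fill color itself."""
    return [[int(p == fill) for p in row] for row in frame]


def pair_with_mask(frame, mask):
    """Illustrative per-pixel (value, occluded) pairs; not FlowVid's input layout."""
    return [list(zip(prow, mrow)) for prow, mrow in zip(frame, mask)]


print(guess_mask_from_color(warped))      # [[1, 1, 0]]  (pixel 0 is a false positive)
print(pair_with_mask(warped, true_mask))  # [[(128, 0), (128, 1), (200, 0)]]
```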

The researchers studied two types of spatial conditions in FlowVid: Canny edges and depth maps. In the input frame shown in Figure 7(a), the panda's eyes and mouth show that Canny edges retain more detail than depth maps. The strength of spatial control in turn affects the editing: during evaluation, the researchers found that Canny edges work better when the structure of the input video should be preserved as much as possible (as in stylization), while depth maps work better when the scene changes substantially (as in object swapping) and more editing flexibility is needed.

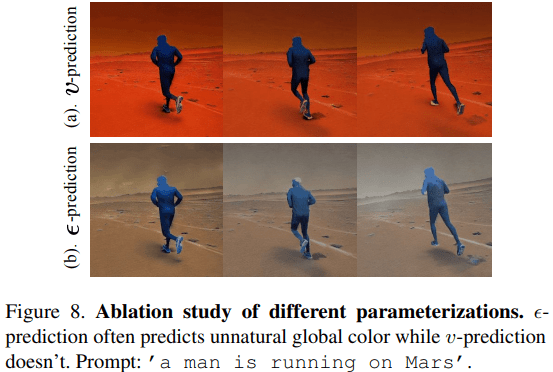

As shown in Figure 8, although ϵ-prediction is commonly used to parameterize diffusion models, the researchers found that it can cause unnatural global color shifts across frames. Even though both parameterizations compared in the figure use the same flow-warped video, ϵ-prediction introduces unnatural grayish colors. A similar phenomenon has also been observed in image-to-video generation.
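The article does not name the alternative parameterization in Figure 8. A common alternative, and the one usually paired with a zero-SNR scheduler like the one in the experimental settings, is v-prediction, where the network predicts v = √ᾱ·ε − √(1−ᾱ)·x₀ instead of the noise ε. The scalar sketch below (an illustration, not FlowVid's code) shows one relevant difference: recovering the clean sample x₀ from an ε-prediction divides by √ᾱ and breaks down at ᾱ = 0, whereas recovery from v does not. It illustrates a known limitation of ε-prediction at zero SNR and is not offered as the cause of the color shift reported in the paper.

```python
import math


def x0_from_eps(x_t, eps, alpha_bar):
    """Invert x_t = sqrt(ab)*x0 + sqrt(1-ab)*eps for x0; divides by sqrt(ab)."""
    return (x_t - math.sqrt(1.0 - alpha_bar) * eps) / math.sqrt(alpha_bar)


def v_target(x0, eps, alpha_bar):
    """v-prediction target: v = sqrt(ab)*eps - sqrt(1-ab)*x0."""
    return math.sqrt(alpha_bar) * eps - math.sqrt(1.0 - alpha_bar) * x0


def x0_from_v(x_t, v, alpha_bar):
    """x0 = sqrt(ab)*x_t - sqrt(1-ab)*v; no division, so valid at ab = 0."""
    return math.sqrt(alpha_bar) * x_t - math.sqrt(1.0 - alpha_bar) * v


x0, eps = 0.7, -0.3
for ab in (0.9, 0.5, 0.1):
    x_t = math.sqrt(ab) * x0 + math.sqrt(1.0 - ab) * eps
    # Both parameterizations recover x0 while some signal remains.
    assert abs(x0_from_eps(x_t, eps, ab) - x0) < 1e-9
    assert abs(x0_from_v(x_t, v_target(x0, eps, ab), ab) - x0) < 1e-9

# At zero terminal SNR (ab = 0), x_t is pure noise: x_t == eps.
x_t = eps
print(x0_from_v(x_t, v_target(x0, eps, 0.0), 0.0))  # 0.7
```

Calling `x0_from_eps(eps, eps, 0.0)` at the same step raises `ZeroDivisionError`: the noise alone carries no information about x₀, so an ε-predicting model has nothing to solve for at that step.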

Limitations

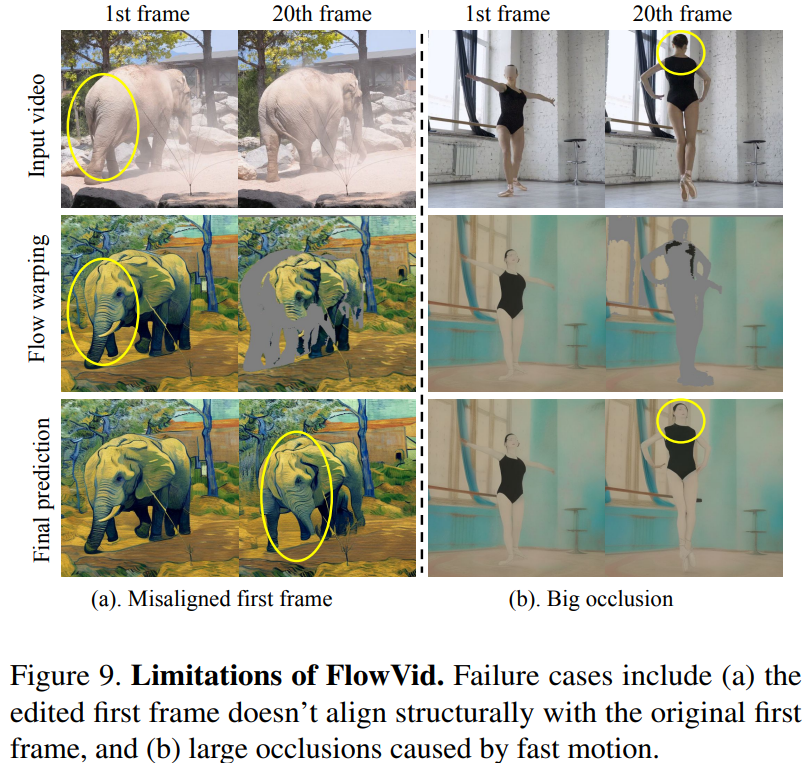

Although FlowVid performs strongly, it has some limitations. First, it relies heavily on the edited first frame, which must be structurally consistent with the input frame. As shown in Figure 9(a), the edited first frame mistakes the elephant's hind legs for its trunk; the erroneous trunk is then propagated to subsequent frames, degrading the final result. Second, when the camera or an object moves so fast that large areas become occluded, FlowVid has to guess the missing regions and may even hallucinate content. As shown in Figure 9(b), when the ballet dancer turns her body and head, the whole body region is occluded. FlowVid handles the clothing well, but it turns the back of the head into a face, which would look horrifying in the final video.

For more details, please refer to the original paper.