I came into VANAR expecting the usual “chain thesis,” because that’s how most people still frame it. But the longer I sat with what they’ve actually shipped and what they’re explicitly building toward, the more that framing felt backwards. VANAR reads less like an ecosystem begging developers to invent use cases, and more like a product stack that happens to settle on a chain they control.

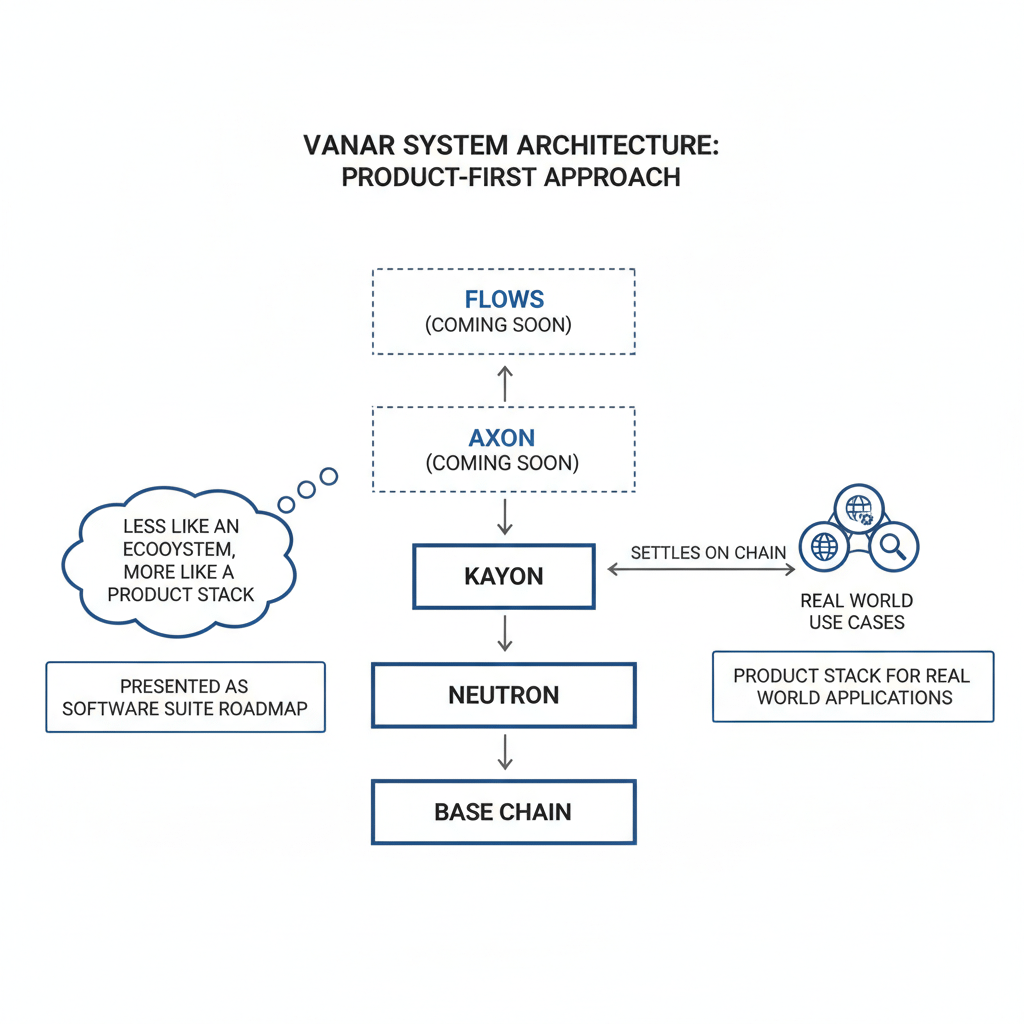

The cleanest signal is how they lay the stack out themselves: base chain first, then Neutron, then Kayon, then two layers they still label as “coming soon” (Axon and Flows). It’s presented like a software suite roadmap, not a token pitch.

That’s where the “looks like Web2” part starts making sense. In Web2, the end product is rarely “a database.” Companies sell systems that make data usable: stored, searchable, portable, and capable of driving workflows. VANAR is trying to do something structurally similar, except they want the storage and the proofs to live inside the chain rather than in a company’s private infrastructure. You can debate whether the chain needs to exist at all, but the shape of the product strategy is noticeably different from most L1 stories.

On the base layer, VANAR’s older documentation is direct about what they think matters for applications: stable, low fees and block times short enough that interactive products don’t feel sluggish. Their whitepaper frames a “fixed-fee” design goal, meaning fees pegged to a dollar value rather than floating with the token price, and repeats a very small per-transaction fee target meant to keep costs predictable for high-frequency usage. The point isn’t novelty. The point is removing friction so the layers above can behave like normal software, where users don’t have to think about cost every time they click.
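To make “fixed relative to dollar value” concrete: if the fee is pegged in dollars, the amount of native token charged has to move inversely with the token’s price. Below is a minimal sketch of that arithmetic. The helper name, the 18-decimal base unit, and the example dollar fee are assumptions for illustration, not figures or code from VANAR’s whitepaper.

```python
from decimal import Decimal, ROUND_CEILING

# Assumption: the native token has 18 decimal places of base units
# (common on EVM-style chains); not taken from VANAR's documentation.
BASE_UNITS_PER_TOKEN = Decimal(10) ** 18


def fee_in_base_units(target_fee_usd: Decimal, token_price_usd: Decimal) -> int:
    """Hypothetical helper: convert a dollar-pegged fee into token base units.

    Because the fee is fixed in dollars, the token amount scales
    inversely with the token's dollar price.
    """
    if token_price_usd <= 0:
        raise ValueError("token price must be positive")
    tokens = target_fee_usd / token_price_usd
    # Round up so the collected fee never falls below the dollar target.
    return int((tokens * BASE_UNITS_PER_TOKEN).to_integral_value(rounding=ROUND_CEILING))
```

With an illustrative $0.001 fee and a token price of $0.02, the charge is 0.05 tokens (5 × 10^16 base units). If the token price doubles to $0.04, the token-denominated fee halves, while the user still pays the same dollar amount. The hard part in practice is the price input itself: whoever supplies `token_price_usd` becomes a trust point worth examining in diligence.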

If you’re doing diligence, you still have to ask whether the chain is actually used or just described. VANAR’s own explorer shows a large running total of transactions and addresses (numbers that are far too big to ignore, even if you remain skeptical about what portion is organic). Those totals don’t prove product-market fit, but they do at least establish that the network is alive, producing blocks, and carrying volume over time rather than sitting idle.

Where VANAR becomes genuinely interesting is Neutron, because it’s not positioned as “storage” in the lazy sense. They describe it as a compression and restructuring system that turns raw files into compact “Seeds” that are meant to be stored directly onchain and later queried like active memory, not treated like dead blobs. The headline claim they repeat is aggressive: compressing something like 25MB into about 50KB, roughly a 500:1 ratio, using “semantic, heuristic, and algorithmic layers.”

If you’re serious about research, that number shouldn’t be taken at face value just because it’s printed on a landing page. Treat it as a test case: what kinds of data compress that well, how consistent is the ratio, what information is lost, and what does “verifiable” mean for a transformed object? But even with skepticism, I think the direction is clear. They’re trying to turn “data” into a reusable primitive that can move across workflows and applications without being trapped in a single vendor’s database.
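One way to treat the 25MB-to-50KB figure as a test case rather than a fact is to compute the ratio it implies and compare it against a generic lossless baseline on your own sample data. The sketch below uses Python’s standard `zlib` module purely as that baseline. It says nothing about how Neutron actually produces Seeds, and the sample inputs are placeholders.

```python
import os
import zlib


def ratio(original_bytes: int, compressed_bytes: int) -> float:
    """Compression ratio: original size divided by compressed size."""
    return original_bytes / compressed_bytes


# The headline claim in decimal units (25 MB -> 50 KB).
CLAIMED_RATIO = ratio(25_000_000, 50_000)


def zlib_baseline(data: bytes) -> float:
    """Ratio achieved by generic lossless compression (zlib, level 9)."""
    return ratio(len(data), len(zlib.compress(data, 9)))


if __name__ == "__main__":
    samples = {
        # Placeholder samples: highly repetitive text vs. incompressible random bytes.
        "repetitive": b"invoice line item\n" * 50_000,
        "random": os.urandom(1_000_000),
    }
    print(f"claimed ratio: {CLAIMED_RATIO:.0f}:1")
    for name, data in samples.items():
        print(f"{name}: zlib {zlib_baseline(data):.1f}:1")
```

A lossless compressor like zlib only reaches ratios in the hundreds on extremely repetitive input and stays near 1:1 on random or already-compressed data. If a 500:1 ratio holds on ordinary documents, that would suggest the “semantic” layer is summarizing or discarding information, which is exactly where the questions about what’s lost and what “verifiable” means start to matter.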

This is also why myNeutron matters more than people give it credit for. It isn’t just “an app” bolted onto a chain. It’s a distribution wedge. The product is framed as a personal knowledge base where you can capture pages, files, notes, and prior work, and then reuse that context without rebuilding it from scratch each time. If VANAR can get real users to treat this kind of memory layer as a daily utility, the chain stops being an abstract infrastructure bet and starts being the rails underneath a habit.

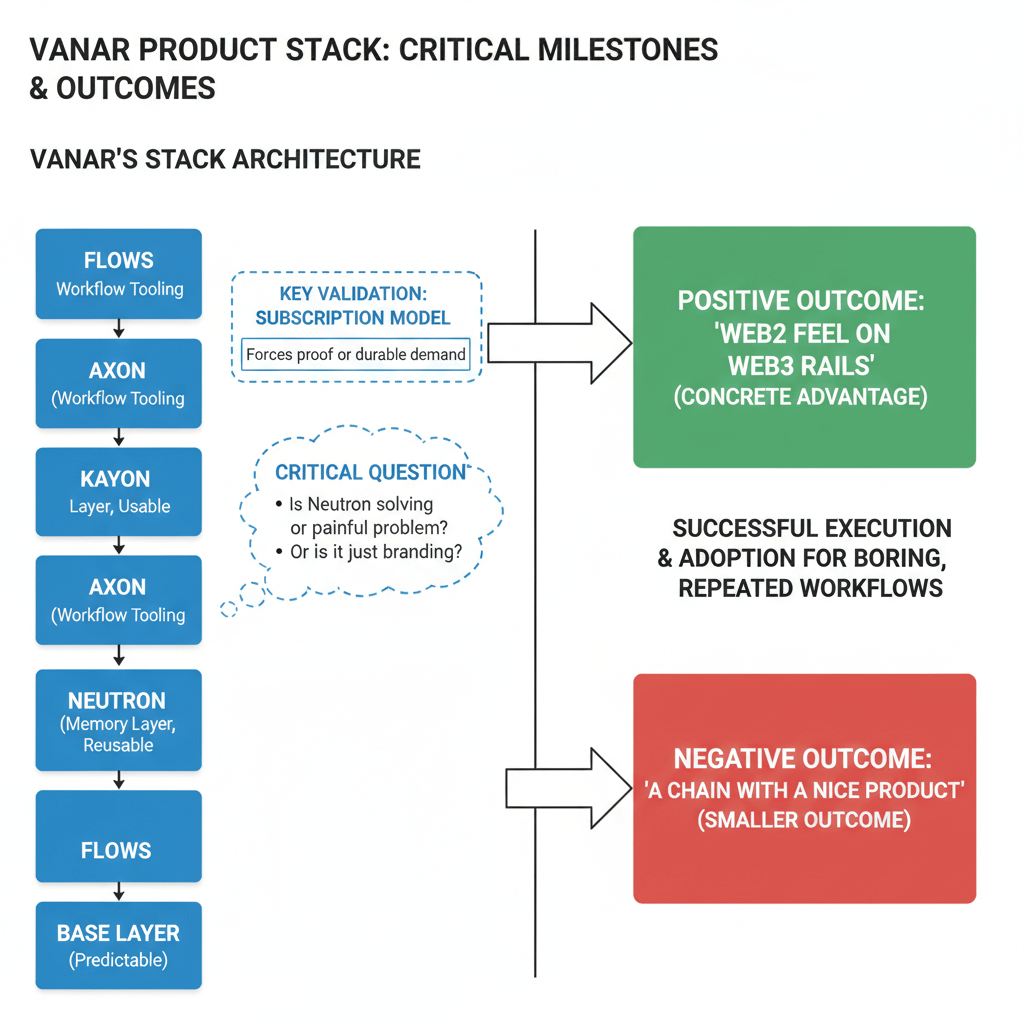

And I’m watching one specific detail here: the move toward monetization. CoinMarketCap’s VANAR updates page explicitly mentions an “AI Tool Subscription Model (2026)” that would move products like myNeutron to a paid model, with the stated intent of creating sustainable onchain demand. That’s a very different tone from the typical crypto playbook of “incentives forever.” Charging money is uncomfortable, but it’s also a sign that someone is at least trying to prove the product can stand on its own economics.

Once you accept the idea that Neutron is meant to be memory, Kayon is easier to interpret. VANAR positions Kayon as the reasoning layer that sits on top of those Seeds and enterprise data, turning stored context into insights and workflows that can be traced and checked rather than treated like black-box outputs. I’m not here to repeat generic “AI + blockchain” claims. What matters is the architectural separation: memory as a base primitive, reasoning as a layer above it. In practice, that’s how durable software systems evolve: one layer stabilizes, then the next layer makes it useful at scale.

Still, Kayon is also where I’d press hardest as an investor. “Auditable” can mean two very different things: it can mean “we log what happened,” or it can mean “third parties can independently verify key steps and inputs.” VANAR’s public descriptions lean into the stronger interpretation. The diligence question is whether the implementation lives up to that under messy real-world conditions, and whether builders outside VANAR can rely on it without custom handholding.
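The gap between “we log what happened” and “third parties can verify key steps” can be illustrated with a toy hash-chained trace. In the sketch below, each reasoning step commits to hashes of its inputs, its output, and the previous record, so anyone holding the original inputs and outputs can recompute the chain and detect altered, substituted, or reordered steps. This is a generic technique chosen for illustration; the record layout and function names are hypothetical, and nothing here describes how Kayon actually records or proves its steps.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS = "0" * 64  # hypothetical starting value for the chain


def sha256_hex(payload: bytes) -> str:
    return hashlib.sha256(payload).hexdigest()


@dataclass(frozen=True)
class StepRecord:
    """Hypothetical record for one reasoning step: hashes only, no raw data."""
    input_hash: str
    output_hash: str
    prev_hash: str
    record_hash: str


def record_step(inputs: dict, output: str, prev_hash: str) -> StepRecord:
    """Commit to a step's inputs, its output, and the previous record."""
    # sort_keys makes the JSON encoding deterministic, so anyone can recompute it.
    input_hash = sha256_hex(json.dumps(inputs, sort_keys=True).encode())
    output_hash = sha256_hex(output.encode())
    record_hash = sha256_hex(f"{input_hash}|{output_hash}|{prev_hash}".encode())
    return StepRecord(input_hash, output_hash, prev_hash, record_hash)


def build_trace(steps: list[tuple[dict, str]]) -> list[StepRecord]:
    """Producer side: chain each step's record to the one before it."""
    records, prev = [], GENESIS
    for inputs, output in steps:
        rec = record_step(inputs, output, prev)
        records.append(rec)
        prev = rec.record_hash
    return records


def verify_trace(steps: list[tuple[dict, str]], records: list[StepRecord]) -> bool:
    """Verifier side: recompute every record from raw inputs/outputs and compare."""
    if len(steps) != len(records):
        return False
    prev = GENESIS
    for (inputs, output), rec in zip(steps, records):
        if record_step(inputs, output, prev) != rec:
            return False
        prev = rec.record_hash
    return True
```

A plain log hands you the records and asks you to trust them. Here, a verifier given the raw steps calls `verify_trace(steps, records)`, and any edited output or reordered step makes it return `False`. Note the limit: a hash chain proves the trace wasn’t altered after the fact, not that the reasoning was sound or that the inputs were the right ones.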

Now, the top of the stack is where the thesis either becomes real or stays a nice diagram. Axon and Flows are still explicitly framed as upcoming layers, and VANAR’s own pages treat them as “coming soon.” Independent writeups in late January 2026 describe Axon as an “agent-ready” contract system and Flows as a toolkit for automated onchain workflows. That description is exactly the kind of thing that can sound impressive and still fail if execution slips or the developer experience is clumsy.

But it’s also the missing piece if you’re trying to understand the “Web2 product stack” comparison. In Web2, the leap from “we store data” to “teams run their business on this” is workflow. Orchestration. Automation. The boring glue that turns a tool into an operating layer. If VANAR ships Flows in a way that actually lets teams define multi-step processes reliably, then Neutron and Kayon become more than clever features: they become the memory and reasoning foundation that workflows can build on.
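To make “define multi-step processes reliably” concrete, here is a minimal sketch of what workflow tooling has to handle at a bare minimum: ordered steps, a shared context passed between them, bounded retries for transient failures, and a clear record of where a run stopped. Every name here (`Step`, `run_workflow`, the step functions) is hypothetical. This is a generic orchestration pattern, not the Flows API, which VANAR still labels “coming soon.”

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Context = dict[str, object]  # shared state passed from step to step (hypothetical shape)


@dataclass
class Step:
    name: str
    run: Callable[[Context], Context]  # returns updates to merge into the context
    max_attempts: int = 1              # bounded retries for transient failures


@dataclass
class RunResult:
    ok: bool
    context: Context
    completed: list[str] = field(default_factory=list)
    failed_step: Optional[str] = None
    error: Optional[str] = None


def run_workflow(steps: list[Step], context: Context) -> RunResult:
    """Run steps in order; stop at the first step that exhausts its retries."""
    ctx = dict(context)  # don't mutate the caller's dict
    completed: list[str] = []
    for step in steps:
        if step.max_attempts < 1:
            raise ValueError(f"{step.name}: max_attempts must be >= 1")
        last_error: Optional[Exception] = None
        for _ in range(step.max_attempts):
            try:
                updates = step.run(ctx)
            except Exception as exc:  # any exception counts as a failed attempt
                last_error = exc
                continue
            ctx.update(updates)  # merge returned updates only after a successful attempt
            last_error = None
            break
        if last_error is not None:
            return RunResult(False, ctx, completed, step.name, repr(last_error))
        completed.append(step.name)
    return RunResult(True, ctx, completed)
```

In this shape, a “load memory” step, a “reason over it” step, and an “act” step are just entries in the list, which mirrors the memory → reasoning → orchestration layering. What production tooling adds on top is the hard part this sketch skips: persisting runs so they can resume after a crash, making retries idempotent so side effects don’t execute twice, and permissions. Those are the details I’d check when Flows ships.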

One thing I appreciate is that VANAR seems to be documenting the story in a way that’s consistent across multiple touchpoints, not just one glossy page. Their blog index shows frequent posts, through early February 2026, about the memory API and the build-out of their “intelligence layer.” That doesn’t guarantee substance, but consistency matters. Projects that are improvising tend to contradict themselves across pages. Here, the same structure keeps showing up: memory → reasoning → orchestration → apps.

At the same time, I’m careful about what I treat as strong evidence. A lot of third-party “analysis” content is basically commentary repackaged as research. I don’t overweight it. I use it only to cross-check whether the market is picking up the same signals VANAR is pushing and to identify what claims are being repeated. For example, multiple recent posts echo the same Neutron compression claims and the same roadmap framing, but those are still downstream of VANAR’s own messaging. That’s not independent validation; it’s confirmation that the narrative is spreading.

So what’s my actual investor read?

I think VANAR is making a bet that the next wave of crypto usage won’t be driven by “more dApps,” but by better primitives for memory, context, and workflow: things that make software feel coherent across time, not just across wallets. Neutron is the attempt to make data compact and reusable onchain. myNeutron is the attempt to turn that into a user habit. Kayon is the attempt to make that memory actionable without losing traceability. Axon and Flows are the attempt to make it all composable into real processes rather than isolated features.

What I don’t think is earned yet is the final step: proof that the stack drives durable demand that isn’t cosmetic. The explorer totals show activity, but they don’t tell you whether people are using Neutron because it solves a painful problem, or because a campaign pushed transactions through the pipe. The subscription move, if it actually happens at scale, would be a meaningful milestone precisely because it forces that question into the open.

That’s why my conclusion is more conditional than celebratory.

VANAR is not interesting to me because it claims to be “AI-native” or because it uses new labels for old ideas. It’s interesting because it’s trying to build a stack the way software companies build stacks: start with a base layer that behaves predictably, add a memory layer that’s reusable, add a reasoning layer that’s usable, then ship workflow tooling that lets other teams build without reinventing plumbing. If they execute the top layers and builders adopt them for boring, repeated workflows, the “Web2 feel on Web3 rails” becomes a concrete advantage rather than a slogan. If Axon and Flows don’t land, or if Neutron ends up being more branding than primitive, then the thesis compresses down into “a chain with a nice product,” and that’s a much smaller outcome.