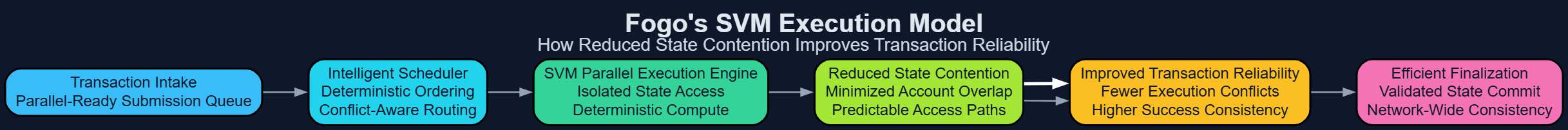

When I look at how transactions behave on Fogo, what stands out is not just raw speed but how the SVM execution model quietly changes the way state contention is handled. On many networks, transactions compete for shared state in ways that create invisible queues, and when demand rises those queues turn into unpredictable delays. Fogo, a fast L1 based on the Solana Virtual Machine, addresses this issue at the execution layer itself. Transactions are structured around explicit state access, and designs that minimize overlap are encouraged, which makes the system less prone to the conflicts that turn into congestion. This design choice directly influences how reliable transaction processing feels in practice.

What becomes interesting is how this plays out under real activity. When multiple DeFi interactions or game actions hit the network at the same time, the SVM model on Fogo allows many of them to proceed without blocking each other, as long as they touch separate parts of state. Instead of forcing everything into a single sequential lane, the execution environment can process independent operations concurrently. The immediate effect is not only higher throughput but a noticeable reduction in transaction collisions. Users experience fewer unexpected stalls, and confirmations arrive with a steadier rhythm. That steadiness matters more than headline performance numbers because it shapes whether the network feels dependable during busy periods.
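To make the idea concrete, here is a minimal sketch of conflict-aware batching. It is a simplified model, not Fogo's or Solana's actual scheduler, and the `Tx` type, the `schedule` function, and the account names are all hypothetical. It assumes each transaction declares the accounts it reads and writes up front, and that two transactions conflict only when one writes an account the other touches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    """A transaction with explicitly declared account access (hypothetical type)."""
    name: str
    reads: frozenset = frozenset()
    writes: frozenset = frozenset()


def conflicts(a: Tx, b: Tx) -> bool:
    # Two transactions conflict when one writes an account the other reads or writes.
    return bool(a.writes & (b.reads | b.writes)) or bool(b.writes & a.reads)


def schedule(txs):
    """Group transactions into batches whose members can run concurrently.

    Each transaction goes into the batch right after the last batch that
    holds a conflicting transaction, so conflicting transactions keep
    their original relative order.
    """
    batches = []
    for tx in txs:
        slot = 0
        for i, batch in enumerate(batches):
            if any(conflicts(tx, other) for other in batch):
                slot = i + 1
        if slot == len(batches):
            batches.append([])
        batches[slot].append(tx)
    return batches


# Hypothetical workload: three transactions on separate state, one that
# competes with the first for the same pool account.
txs = [
    Tx("swap_a", writes=frozenset({"pool_1", "alice"})),
    Tx("swap_b", writes=frozenset({"pool_2", "bob"})),
    Tx("game_move", writes=frozenset({"player_7"})),
    Tx("swap_c", writes=frozenset({"pool_1", "carol"})),
]
batches = schedule(txs)
names = [[t.name for t in batch] for batch in batches]
print(names)
```

In this toy run the three transactions on separate state share the first batch, and only `swap_c`, which writes the same `pool_1` account as `swap_a`, has to wait for a second batch.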

From a developer perspective, reduced state contention changes how applications are designed. Builders working on Fogo are incentivized to think carefully about how their programs access and organize state, because well-structured contracts benefit directly from the SVM's ability to run operations in parallel. Applications that separate concerns and avoid unnecessary shared bottlenecks tend to scale more gracefully. Over time, this encourages an ecosystem style where performance is not an afterthought but part of the architectural mindset. The cause is the execution model’s preference for explicit state management; the effect is a developer culture that treats scalability as a design constraint from day one.
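As a rough illustration of why state layout matters, the sketch below compares two hypothetical designs for the same deposit feature. In one, every deposit also updates a single shared totals account. In the other, each deposit writes only the user's own balance account. The account names and helper functions are assumptions made for illustration and do not come from any real program.

```python
from itertools import combinations


def write_set_shared(user: str) -> set:
    # Hypothetical design A: every deposit also writes one global totals account.
    return {"vault_totals", f"balance:{user}"}


def write_set_split(user: str) -> set:
    # Hypothetical design B: a deposit writes only the user's own balance account.
    return {f"balance:{user}"}


def conflicting_pairs(write_sets) -> int:
    # Count pairs of transactions whose write sets overlap and so cannot run in parallel.
    return sum(1 for a, b in combinations(write_sets, 2) if a & b)


users = ["alice", "bob", "carol", "dave"]
shared = conflicting_pairs([write_set_shared(u) for u in users])
split = conflicting_pairs([write_set_split(u) for u in users])
print(f"shared totals account: {shared} conflicting pairs")
print(f"per-user accounts only: {split} conflicting pairs")
```

With four users, the shared-account design makes every pair of deposits conflict, while the per-user design leaves none. The trade-off is that a global total then has to be derived some other way, for example by aggregating off-chain or in a separate, less frequent transaction.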

There is also a subtle reliability advantage that emerges from this structure. When contention is reduced at the execution layer, the network spends less effort resolving conflicts and reordering heavy queues of competing transactions. That translates into more predictable settlement behavior. Rather than swinging between fast and slow phases whenever demand bursts, Fogo can keep a steadier processing rhythm. For those running financial apps or live systems, reliability is generally worth more than occasional peaks in performance. It lets them build strategies and operating procedures around a predictable pattern instead of continually adapting to the ebb and flow of congestion.

Another practical consequence appears in how complex multi-step interactions behave. Workflows that involve several dependent transactions benefit from an environment where unrelated activity is less likely to interfere. On Fogo, the SVM's handling of state access helps isolate independent operations, so one application's surge in activity is less likely to cascade into delays for others that operate on different state domains. This separation does not eliminate competition entirely, but it narrows the situations in which unrelated actions become entangled. The observable outcome is a network that feels more compartmentalized and resilient when diverse applications run simultaneously.

All of this reinforces a broader point about execution design on Fogo. By building around the SVM's explicit state model, the chain turns a low-level technical choice into a property that users can see: transaction reliability under load. Reduced contention is not a theoretical optimization; it is a mechanism that influences confirmation timing, application responsiveness, and the trust developers gain when they deploy performance-sensitive systems. As activity increases and more applications share the same environment, the advantages multiply, because the execution layer keeps rewarding designs that work with its concurrency model.

The end result is a system in which raw performance and real-world usability start to come together. Transactions that do not share bottlenecks with others can proceed with barely any interference, and the network as a whole spends less time recovering from congestion it caused itself. For users, this shows up as smoother interaction patterns. For developers, it appears as a platform where careful state design is consistently paid back with stable execution. By reducing contention at its core, Fogo demonstrates how an execution model can influence not just speed metrics but the everyday reliability that determines whether a high-performance L1 feels trustworthy in real use.