Collaboration among AI agents is entering a more complex phase: instead of a single AI serving you, multiple AIs automatically work together and form a 'task contracting chain.'

You issue a command; AI A decomposes it and delegates the pieces to B, C, D, and E for execution, and each of them may subcontract further. It resembles a sophisticated real-world supply chain, but faster and harder to observe.

This raises a question more pressing than 'Is AI intelligent?': who is responsible?

When a task fails, who bears the loss?

Where did the error occur?

Was there an abuse of authority?

How will funds be returned?

How can the entire process be audited?

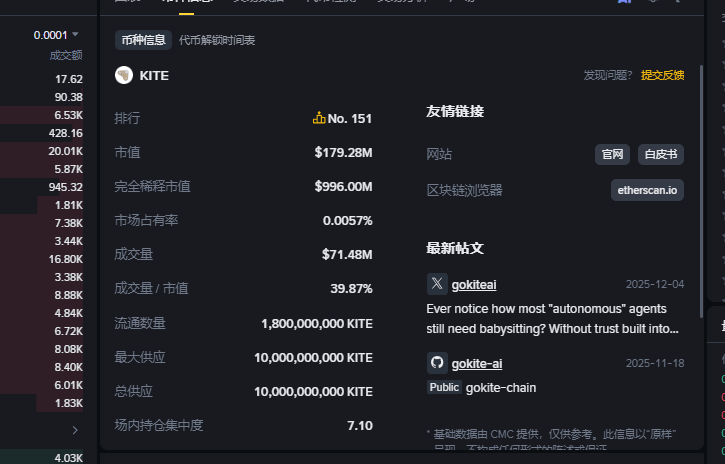

If these issues cannot be resolved, a large-scale AI economy is out of the question. In my view, Kite is quietly constructing the underlying logic of this future responsibility system.

Why can't centralized systems support AI collaboration?

Current cloud services or centralized platforms have three fatal flaws:

Process black box: only task results are visible, and the collaboration details between AIs cannot be audited.

Lack of credibility: records are controlled by a single entity and cannot serve as a trust foundation across companies and ecosystems.

Responsibility cannot follow the task: once a task is subcontracted, responsibility leaves the control of the original system.

Therefore, the 'responsibility chain' of future AI collaboration must be built on a blockchain. The chain itself provides immutable records, verifiable evidence, and programmable automatic execution.

But not every public chain is suitable. A responsibility chain requires fine-grained identity, permission, behavior-auditing, and payment-settlement layers, which is exactly what Kite is building.

Kite's 'passport' system: the first anchor point of responsibility.

Each AI agent on Kite has a unique 'digital passport.' This is not only an identity marker but also the starting point of responsibility.

It clearly defines:

Who does this AI represent?

What are its permission boundaries? (For example: a maximum expenditure of $500 per month.)

Can its behavior be traced?

When a task goes wrong, accountability is no longer vaguely stated as 'the AI has a problem,' but can be precisely pinpointed to 'a specific AI with a certain ID, operating under specific permissions, executed an erroneous operation.' This makes responsibility clear and specific.
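To make the three passport questions concrete, here is a minimal in-memory sketch. It is an illustration only: the `AgentPassport` class, its field names, and the `authorize` method are assumptions made for this example and do not reflect Kite's actual schema or API.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class AgentPassport:
    """Hypothetical passport record; names are illustrative, not Kite's schema."""
    agent_id: str            # unique identity of the agent
    principal: str           # who the agent represents
    monthly_limit: Decimal   # permission boundary, e.g. 500 USD per month
    spent_this_month: Decimal = Decimal("0")
    history: list = field(default_factory=list)  # traceable record of every attempt

    def authorize(self, amount: Decimal, purpose: str) -> bool:
        """Approve a spend only if it stays within the monthly limit; log the attempt."""
        allowed = amount > 0 and self.spent_this_month + amount <= self.monthly_limit
        if allowed:
            self.spent_this_month += amount
        # Rejected attempts are logged too, so overreach stays visible.
        self.history.append((self.agent_id, purpose, amount, allowed))
        return allowed


passport = AgentPassport("agent-042", "alice@example.com", Decimal("500"))
first = passport.authorize(Decimal("450"), "book flight")   # within the limit
second = passport.authorize(Decimal("100"), "book hotel")   # would exceed the limit
```

In this sketch the second request is rejected because it would push spending past the limit, and both attempts remain in `history` under the agent's ID, which is the kind of record that lets a failure be pinned to a specific agent and permission set.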

Modular design: the 'scalpel' that dissects the responsibility chain.

Kite's modular system is essentially a parser for the responsibility chain. Each module is responsible for a specific node in that chain:

Risk control module: assessing task risks.

Audit module: recording each step of the execution sequence.

Budget module: strictly controlling expenditure.

Verification module: verifying the reliability of external APIs.

When a complex task flows through multiple modules, each module leaves its own 'responsibility fragment.' Ultimately, all fragments connect to form a complete, verifiable responsibility trajectory. This provides a solid data foundation for subsequent tracing and accountability.
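One common way to make such a trajectory verifiable is a hash chain, where every fragment commits to the one before it. The sketch below assumes that approach; the source does not say how Kite links fragments, and the function names, field names, and module labels are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first fragment


def _digest(body: dict) -> str:
    """Deterministic SHA-256 over the fragment body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def add_fragment(trail: list, module: str, agent_id: str, detail: str) -> None:
    """Append a fragment that commits to the previous fragment's hash."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS
    body = {"module": module, "agent_id": agent_id,
            "detail": detail, "prev_hash": prev_hash}
    trail.append({**body, "hash": _digest(body)})


def verify(trail: list) -> bool:
    """Recompute every hash and check that each fragment links to its predecessor."""
    prev_hash = GENESIS
    for entry in trail:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


trail = []
add_fragment(trail, "risk_control", "agent-A", "risk score: low")
add_fragment(trail, "budget", "agent-A", "reserved 120 USD")
add_fragment(trail, "audit", "agent-B", "called external API")
intact = verify(trail)

trail[1]["detail"] = "reserved 20 USD"   # simulate tampering with one fragment
still_valid = verify(trail)
```

Editing any fragment after the fact changes its hash and breaks verification, so `still_valid` comes out false here. On an actual chain the immutability would come from consensus rather than from a Python list; this only shows the linking idea.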

Stablecoin settlement: ensuring that responsibilities have definite economic consequences.

Responsibility cannot remain verbal; it must be linked to economic consequences. Kite's adoption of stablecoins as the settlement layer is crucial here.

This ensures that:

Determining which AI or service provider bears the loss, and processing the refund, can be executed automatically and accurately.

The amount of compensation is fixed and not affected by token price fluctuations.

The entire execution process of economic responsibility is transparent and indisputable.

Only once this economic loop is closed can the responsibility system truly take effect.
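The settlement idea above can be modeled as a simple escrow: funds are locked when the task starts and either released to the provider or refunded to the payer. This is an in-memory sketch under stated assumptions; the `Escrow` class and `settle` function are hypothetical, and real settlement would run as on-chain contract logic rather than a Python dictionary.

```python
from dataclasses import dataclass


@dataclass
class Escrow:
    """Hypothetical escrow record for one delegated task."""
    payer: str
    provider: str
    amount_cents: int   # stablecoin amount in minor units; integers avoid rounding drift


def settle(escrow: Escrow, balances: dict, task_succeeded: bool) -> str:
    """Release the escrow to the provider on success, or refund the payer on failure."""
    recipient = escrow.provider if task_succeeded else escrow.payer
    balances[recipient] = balances.get(recipient, 0) + escrow.amount_cents
    return recipient


# The user started with 500.00 and has already locked 120.00 in escrow.
balances = {"user": 38_000, "agent-C": 0}
escrow = Escrow(payer="user", provider="agent-C", amount_cents=12_000)

refunded_to = settle(escrow, balances, task_succeeded=False)
```

Because the amount is denominated in a stable unit and fixed when the escrow is created, the refund equals exactly what was locked, regardless of any token price movement in between.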

Future vision: from the responsibility chain to the 'responsibility map.'

We can imagine that future AI collaboration will generate a complex 'responsibility map':

Each AI is a node in the graph.

Each task delegation is a connecting line.

Each flow of funds is a weight.

Each failure point will leave a mark on the graph.

This verifiable and auditable map will become the core basis for corporate collaboration, compliance review, and dispute resolution. Kite's protocol layer is providing the fundamental 'data points' and 'accounting' capabilities for generating this map.
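As a rough illustration, the map can be represented as a weighted directed graph. The `ResponsibilityMap` class below is a hypothetical sketch, not part of any Kite interface; it stores delegations as edges, funds as edge weights, and failures as marks on nodes, then traces the delegation path that led to a failure.

```python
from collections import defaultdict


class ResponsibilityMap:
    """Hypothetical graph: agents are nodes, delegations are weighted edges."""

    def __init__(self):
        self.edges = defaultdict(dict)   # delegator -> {delegate: funds passed along}
        self.failures = {}               # agent -> failure description

    def delegate(self, src: str, dst: str, funds: int) -> None:
        self.edges[src][dst] = funds

    def mark_failure(self, agent: str, reason: str) -> None:
        self.failures[agent] = reason

    def path_to(self, root: str, target: str):
        """Depth-first search for the delegation path from root to target."""
        stack = [(root, [root])]
        while stack:
            node, path = stack.pop()
            if node == target:
                return path
            for nxt in self.edges.get(node, {}):
                if nxt not in path:          # guard against delegation cycles
                    stack.append((nxt, path + [nxt]))
        return None


rmap = ResponsibilityMap()
rmap.delegate("A", "B", 300)
rmap.delegate("A", "C", 200)
rmap.delegate("C", "E", 80)
rmap.mark_failure("E", "external API timeout")

chain = rmap.path_to("A", "E")            # delegation path that led to the failure
funds_at_risk = rmap.edges["C"]["E"]      # weight on the last hop
```

Here the failure at E traces back through C to A, and the weight on the final edge shows how much was committed on that hop, which is the kind of query a compliance review or dispute process would run against the map.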

Conclusion: Kite is building the 'foundation of trust' for the era of automation.

The evolution of AI capabilities is only the first half; establishing a clear and reliable responsibility system is the second half. When countless AIs begin to interact autonomously and handle value, what the world needs is not smarter algorithms, but more trustworthy responsibility infrastructure.

What Kite is building is a verifiable responsibility system that binds identity, permissions, behavior, and payments together. It may not be as eye-catching as the application layer, but it is laying the most critical trust rails for the upcoming automated economy driven by billions of AI agents.

This is not just a technical upgrade; it is a fundamental transformation of production relations.

Note: This article explores technological concepts and industry trends and does not constitute any investment advice. The combination of AI and blockchain is still a frontier exploration with high uncertainty; please view it rationally.