I was late to a call once because my laptop decided today was the day to “Update.” Not crash. Not die. Just… take control. Spinner, reboot, fan noise, regret. The funny part? The meeting was about speed. Low latency. Real-time systems. And there I was, held hostage by a background task I didn’t ask for.

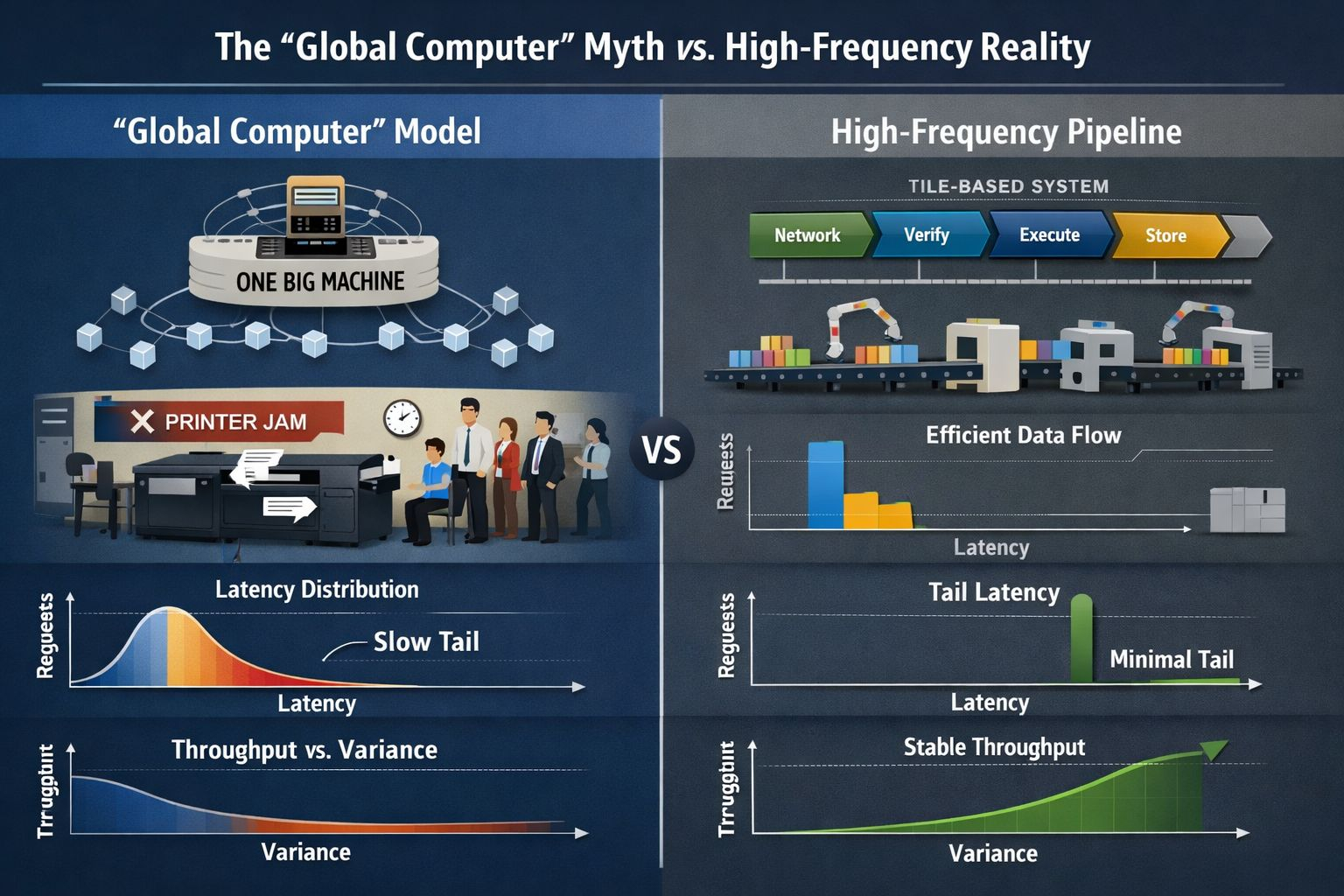

That’s the same vibe you get when people call a blockchain a “global computer.” Sounds clean. One big machine. You send work in, it runs, you get results.

In practice, it’s more like a messy office where one slow printer jams and suddenly the whole floor is waiting. If you want high-frequency apps, that model breaks.

Not because crypto is cursed. Because computers have rules. Physics has rules. And coordination across thousands of nodes has… brutal rules.

Fogo’s vision is basically this: stop pretending the chain is a single computer. Treat it like a high-speed data plant.

A pipeline. Something you engineer for steady flow. Minimal drama. Consistently fast in practice, not just in theory.

High-frequency doesn’t mean “trading bots only.” It means anything where timing is the product.

Games that can’t stutter. Payments that can’t hang. Order books that can’t “finalize later.” If the system hiccups, users don’t write a thinkpiece. They leave.

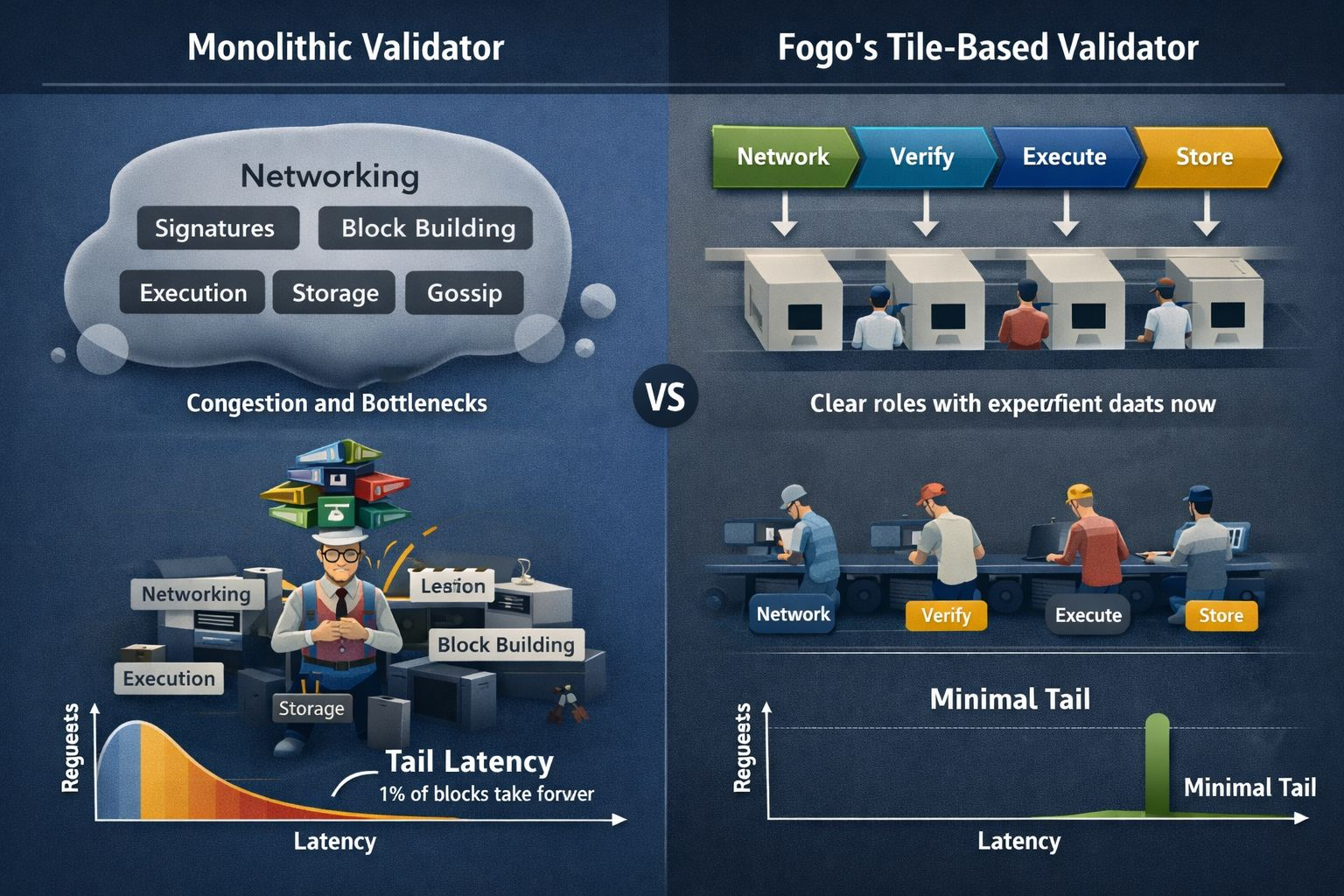

Most chains fail here for boring reasons. They build the validator as one giant blob of a program. One process does all the jobs.

Networking, signature checks, block building, execution, storage, gossip. It’s like hiring one person to be the cashier, cook, cleaner, and security guard. Sure, it works at 2 customers a day.

At 2,000, it turns into chaos. Context switches. Cache misses. Lock contention. Tail latency. That last one matters most.

Tail latency is the slowest slice of your response times. Not your average. The ugly end of the curve. The “1% of blocks that take forever.”
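To make “the ugly end of the curve” concrete, here’s a small Python sketch that compares the mean, the median (p50) and the 99th percentile (p99) for a batch of latency samples. The block times are made up for illustration, not measurements of any chain, and the nearest-rank percentile used here is one common convention among several.

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]

# Hypothetical block times in milliseconds: 98 quick blocks and 2 stragglers.
block_times_ms = [40] * 98 + [900, 1200]

mean_ms = sum(block_times_ms) / len(block_times_ms)
p50 = percentile(block_times_ms, 50)
p99 = percentile(block_times_ms, 99)
print(f"mean={mean_ms:.1f}ms p50={p50}ms p99={p99}ms")  # mean=60.2ms p50=40ms p99=900ms
```

The mean and the median both look healthy. The p99 is over 20 times the median, and that’s the number users actually feel.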

In distributed systems, that tail becomes your user experience. Because a single slow validator can drag the whole network’s rhythm.

You can have 99 fast nodes and still feel slow if the system needs the 100th to behave.
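A back-of-the-envelope sketch shows why. Assume, purely for illustration, that each of 100 validators independently has a 1% chance of a slow moment in a given round, and that the round has to wait for all of them. The chance that a round hits at least one slow node is then 1 − 0.99^100, roughly 63%. Real consensus protocols usually wait for a quorum rather than every node, so treat this as the worst case the paragraph above describes, not a model of any specific chain.

```python
def p_round_hits_tail(n_nodes: int, p_slow: float) -> float:
    """Probability that at least one of n independent nodes is slow in a round."""
    return 1 - (1 - p_slow) ** n_nodes

def round_latency_ms(node_latencies_ms):
    """If a round must wait for every node, its latency is the slowest node's latency."""
    return max(node_latencies_ms)

print(f"{p_round_hits_tail(100, 0.01):.3f}")  # 0.634
print(round_latency_ms([40] * 99 + [800]))    # 800
```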

So Fogo leans into a different idea: decompose the validator. Break it into tiles. A tile is a tight worker that does one job well, on purpose.

Think assembly line, not Swiss Army knife. Each tile has a clear role. Clear inputs. Clear outputs. Less shared state. Less surprise.
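Here’s a minimal Python sketch of that idea: one worker, one job, one input queue, one output queue. The `Tile` class and its names are hypothetical and purely illustrative. It is not Fogo’s code, and a real validator would likely implement tiles in native code with far lighter messaging than Python threads and locked queues.

```python
import queue
import threading

class Tile:
    """A single-purpose worker: reads from one input queue, writes to one output queue."""

    def __init__(self, name, work, inbox, outbox):
        self.name = name
        self.work = work      # the one job this tile does
        self.inbox = inbox    # clear input
        self.outbox = outbox  # clear output
        self.thread = threading.Thread(target=self._run, name=name, daemon=True)

    def _run(self):
        while True:
            item = self.inbox.get()
            if item is None:          # shutdown signal: pass it downstream and stop
                self.outbox.put(None)
                return
            self.outbox.put(self.work(item))

    def start(self):
        self.thread.start()
        return self

inbox, outbox = queue.Queue(), queue.Queue()
doubler = Tile("doubler", lambda x: x * 2, inbox, outbox).start()
for x in (1, 2, 3):
    inbox.put(x)
inbox.put(None)
doubler.thread.join()

results = []
while (item := outbox.get()) is not None:
    results.append(item)
print(results)  # [2, 4, 6]
```

The tile owns nothing but its queues and its function, which is the “less shared state” point in miniature.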

That tile-based approach is not a cosmetic refactor. It’s an attack on jitter. Jitter is the random delay that shows up even when nothing “looks” wrong.

The OS scheduler moves threads around. CPU caches get cold. Memory access gets weird. Interrupts pile up.

The system is “fine” until it isn’t. High-frequency systems hate that. They want boring. Predictable. Same work, same time, again and again.

In a tile design, you can pin work to cores. You can keep hot data hot. You can reduce the number of times data bounces across the machine.
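Pinning is the most concrete of these dials. On Linux, Python exposes the kernel’s CPU-affinity call as `os.sched_setaffinity`, and the sketch below uses it to restrict the caller to a single core. It only illustrates the OS mechanism; it says nothing about how Fogo actually assigns its tiles to cores, and the helper name `pin_to_core` is hypothetical.

```python
import os

def pin_to_core(core=None):
    """Restrict the caller to a single CPU core (Linux only).

    Returns the core used, or None on platforms without affinity support.
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    allowed = sorted(os.sched_getaffinity(0))  # cores the caller may currently run on
    if core is None:
        core = allowed[0]
    if core not in allowed:
        raise ValueError(f"core {core} is not in the allowed set {allowed}")
    os.sched_setaffinity(0, {core})  # pid 0 means the caller (on Linux, the calling thread)
    return core

original = os.sched_getaffinity(0) if hasattr(os, "sched_getaffinity") else None
core = pin_to_core()
print("pinned to core:", core)
if original is not None:
    os.sched_setaffinity(0, original)  # undo the pin for the rest of this demo
```

A pinned worker stops getting migrated between cores by the scheduler, so its caches stay warm between iterations.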

You can keep the packet path short. And you can stop one noisy job from stepping on the toes of another.

A packet hits the node.

One tile handles network intake. Another tile validates signatures.

Another tile does scheduling.

Another tile pushes execution.

Another tile handles storage writes.
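The packet path just listed can be sketched as five chained Python generators, each doing one job and handing its output to the next. Everything here is hypothetical: `Txn`, `sig_ok` (a stand-in for a real signature check) and the stage functions are illustrative names, not Fogo’s. Generators also run lazily on one thread, so this shows the shape of the hand-offs, not the parallelism real tiles get from running on separate cores.

```python
from dataclasses import dataclass

@dataclass
class Txn:
    sender: str
    amount: int
    sig_ok: bool  # hypothetical stand-in for a real signature check result

def net_intake(raw_packets):
    """Stage 1: turn raw (sender, amount, sig_ok) tuples into transactions."""
    for sender, amount, sig_ok in raw_packets:
        yield Txn(sender, amount, sig_ok)

def verify(txns):
    """Stage 2: drop transactions that fail the (pretend) signature check."""
    for t in txns:
        if t.sig_ok:
            yield t

def schedule(txns, batch_size=2):
    """Stage 3: group verified transactions into fixed-size batches."""
    batch = []
    for t in txns:
        batch.append(t)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # flush the final partial batch

def execute(batches, spent):
    """Stage 4: apply each batch to in-memory state and emit a snapshot."""
    for batch in batches:
        for t in batch:
            spent[t.sender] = spent.get(t.sender, 0) + t.amount
        yield dict(spent)  # copy, so later batches don't mutate earlier snapshots

def store(snapshots, log):
    """Stage 5: append each snapshot to a list standing in for durable storage."""
    for snap in snapshots:
        log.append(snap)

packets = [("alice", 5, True), ("bob", 3, False), ("alice", 2, True), ("carol", 1, True)]
spent, log = {}, []
store(execute(schedule(verify(net_intake(packets))), spent), log)
print(log)  # [{'alice': 7}, {'alice': 7, 'carol': 1}]
```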

They pass messages like relay runners. The baton moves forward. No one tries to run the whole race alone.

And yes, the details matter. Signature checks are heavy. They chew CPU. If you let them share a thread pool with networking, you’ll drop packets under load. Then senders retry.

Then latency spikes. Then people call it “congestion” like it’s weather. It’s not weather. It’s design.
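A toy simulation makes the dropped-packet part concrete. It models a fixed-size receive buffer: packets arrive at a steady rate, anything that doesn’t fit is dropped, and a consumer drains the buffer each tick. The rates and buffer size are invented for illustration; the “shared” case simply assumes the consumer loses some of its time to signature checks and drains fewer packets per tick.

```python
def simulate_rx(ticks, arrivals_per_tick, drained_per_tick, buffer_slots):
    """Count packets dropped by a fixed-size receive buffer.

    Each tick, `arrivals_per_tick` packets arrive and anything that doesn't
    fit in the buffer is dropped. Then the consumer drains up to
    `drained_per_tick` packets.
    """
    buffered = dropped = 0
    for _ in range(ticks):
        space = buffer_slots - buffered
        accepted = min(arrivals_per_tick, space)
        dropped += arrivals_per_tick - accepted
        buffered += accepted
        buffered -= min(buffered, drained_per_tick)
    return dropped

# Dedicated intake: the consumer keeps up with arrivals.
print(simulate_rx(ticks=100, arrivals_per_tick=10, drained_per_tick=10, buffer_slots=64))  # 0
# Shared with signature checks: the same consumer now drains only 6 per tick.
print(simulate_rx(ticks=100, arrivals_per_tick=10, drained_per_tick=6, buffer_slots=64))   # 342
```

The buffer hides the problem for a while, then every tick starts losing packets, and each lost packet is a future retry.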

Same for execution. Execution wants compute and fast memory. Storage wants durable writes and careful ordering.

If you make them fight in one big process, you get lock wars.

If you isolate them into tiles, you can manage the handoff.

You can backpressure. You can measure each stage like a factory manager who actually walks the floor.
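Walking the floor mostly means recording timings per stage, so a slow stage shows up by name instead of as a vague “the node is slow.” Here is a minimal sketch with a hypothetical `StageMetrics` helper built on `time.perf_counter`; the stage names and the stand-in work are illustrative only.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

class StageMetrics:
    """Per-stage latency samples, keyed by stage name."""

    def __init__(self):
        self.samples = defaultdict(list)  # stage name -> list of durations in seconds

    @contextmanager
    def timed(self, stage):
        start = time.perf_counter()
        try:
            yield
        finally:
            self.samples[stage].append(time.perf_counter() - start)

    def report(self):
        """Summarize each stage: sample count, worst case and mean, in milliseconds."""
        out = {}
        for stage, xs in self.samples.items():
            out[stage] = {
                "count": len(xs),
                "max_ms": max(xs) * 1000,
                "mean_ms": sum(xs) / len(xs) * 1000,
            }
        return out

metrics = StageMetrics()
for _ in range(5):
    with metrics.timed("verify"):
        sum(i * i for i in range(10_000))  # stand-in for CPU-heavy work
    with metrics.timed("store"):
        time.sleep(0.001)                  # stand-in for a slow write

for stage, stats in metrics.report().items():
    print(stage, stats)
```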

Backpressure is a fancy term for “slow down upstream so you don’t choke downstream.” In normal apps, you can fake it.

In chains, you can’t. If the node keeps gulping data when the executor is behind, you get queues. Queues create delay. Delay creates timeouts.

Timeouts create retries. Retries create load. Load creates more delay. It’s a dumb loop, and it’s common.
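Here’s that loop as a toy model. Each tick, fresh traffic arrives on top of any retries; the server completes up to its capacity and the rest time out. With retries on, every timeout comes back next tick as extra load. The numbers are invented, and real clients usually add backoff and jitter, which this sketch deliberately leaves out.

```python
def simulate_retry_storm(ticks, base_load, capacity, retry_on_timeout=True):
    """Toy model of the timeout -> retry -> load loop.

    Returns the offered load (fresh requests plus retries) for each tick.
    """
    retries = 0
    history = []
    for _ in range(ticks):
        offered = base_load + retries
        timed_out = max(0, offered - capacity)  # work the server couldn't finish
        retries = timed_out if retry_on_timeout else 0
        history.append(offered)
    return history

print(simulate_retry_storm(5, base_load=110, capacity=100))  # [110, 120, 130, 140, 150]
print(simulate_retry_storm(5, base_load=110, capacity=100, retry_on_timeout=False))  # [110, 110, 110, 110, 110]
```

Running just 10% over capacity, the retrying system never catches up; the offered load keeps climbing every tick.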

Tile-based design gives you dials. You can tune queue sizes. You can cap work per stage. You can keep the system stable under stress instead of heroic and fragile.
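Python’s standard `queue.Queue(maxsize=...)` is enough to show two of those dials. A bounded queue between stages makes the producer block when the consumer falls behind (backpressure), and a batch cap limits how much work the consumer takes per pass. `QUEUE_DEPTH`, `MAX_BATCH` and `slow_executor` are hypothetical names for this sketch; a production validator would likely use something far lighter than a locked Python queue.

```python
import queue
import threading
import time

QUEUE_DEPTH = 4  # hypothetical dial: how much work may wait between stages
MAX_BATCH = 2    # hypothetical dial: the most items the stage takes per pass

handoff = queue.Queue(maxsize=QUEUE_DEPTH)
processed = []

def slow_executor():
    """Downstream stage: takes at most MAX_BATCH items per pass, then 'works'."""
    while True:
        batch = [handoff.get()]  # block until there is work
        while len(batch) < MAX_BATCH:
            try:
                batch.append(handoff.get_nowait())
            except queue.Empty:
                break
        time.sleep(0.01)  # stand-in for execution time
        processed.extend(x for x in batch if x is not None)
        if None in batch:  # shutdown marker seen
            return

worker = threading.Thread(target=slow_executor)
worker.start()
max_seen = 0
for i in range(20):
    handoff.put(i)  # blocks while the queue is full: that's the backpressure
    max_seen = max(max_seen, handoff.qsize())
handoff.put(None)  # shutdown marker
worker.join()
print(len(processed), max_seen)
```

The producer is slowed to the executor’s pace instead of piling up an unbounded queue, so delay stays capped at roughly `QUEUE_DEPTH` items’ worth of work.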

All of this means Fogo is aiming for a chain that behaves less like a monolith and more like a network appliance. A purpose-built box.

If you’ve ever seen how real exchanges build matching engines, or how low-latency firms do packet capture, it’s that mindset.

Tight loops. Minimal branching. Clear ownership of resources. No magical thinking.

And it also means the ecosystem story changes. High-frequency apps don’t just need cheap fees. They need predictable execution and predictable timing.

Developers can work around high fees. They can’t work around random pauses. A game can’t explain to a player that the validator’s garbage collector woke up. An order book can’t say “finality is taking a little nap.”

Most “global computer” talk is marketing. It sells a feeling. But high-frequency is not a feeling. It’s engineering.

Fogo’s tile idea, if they execute it well, is at least pointing at the right enemy: variance.

If they don’t, it’s just another fast-in-a-lab claim. I’m not interested in lab claims.

I’m interested in behavior under load, during spikes, when the network is noisy, when nodes are uneven, when real users pile in. That’s where designs either hold or fold.

So yeah. Decomposing the validator is not sexy. It’s plumbing. It’s the kind of work that rarely trends.

But the chains that win serious usage tend to win on plumbing. Because users don’t worship architecture. They just want the spinner to stop.

🚨 Not Financial Advice. 🚨